Inside Anoki’s Multimodal Hackathon: 8 Hours, 11 Teams, One Clear Mission

We recently cleared our calendars for an intense 8-hour hackathon with 11 teams from product, design, and engineering, all working toward one mission: pushing the boundaries of our multimodal AI layer.

At Anoki, our core engines—ContextIQ and CreativeAI—are designed to deliver the "Right Moment" for CTV advertising by deeply understanding video content. But for this event, we looked beyond the ad break to test the horizontal power of our infrastructure.

We operated on a core thesis: video is the world’s richest source of truth, but the true challenge, and the massive opportunity, lies in operationalizing that intelligence in any industry context.

The prompt was simple:

How might we transform multimodal signals into actionable intelligence?

The results were striking. Teams built systems that could see, hear, and reason, turning raw inputs into immediate utility.

From sports analytics and elder care to infrastructure debugging, the hackathon demonstrated how quickly our core video intelligence infrastructure can be shaped to solve specific, real-world problems.

Hackathon Snapshot ⚡

- Format: 8-hour sprint, demo-first

- Teams: 11

- Theme: Multimodal → Intelligence → Action

- Scope: Industry-agnostic (Media, Security, Retail, Education, Sports)

The Patterns We Saw

Across 11 projects, the hackathon revealed three fundamental shifts in how we are operationalizing multimodal AI:

1) Chat became the Control Plane (Agents-as-Partners)

We saw a shift away from standalone "features" toward autonomous collaborators that execute end-to-end workflows. From ChatContextIQ creating media plans to the Block System Debugger diagnosing KPI drops and the Personal Assistant for Business Analysts running Spark queries, teams showed that agentic chat is the most natural interface for complex data systems.

2) Closed-Loop Systems (Observe → Decide → Act)

The most impactful demos didn’t stop at analysis; they drove decisions and actions. We saw a shift from passive monitoring to active intervention: SeniorCare AI protecting privacy by keeping feeds blacked out until a fall is detected, Coach’s Assistant converting live match signals into instant alerts, and NeuroShift correcting cognitive drift in real time.

3) A Composable Video Intelligence Layer

A major takeaway was the horizontal power of our core infrastructure. By leveraging shared multimodal primitives across Anoki ContextIQ and CreativeAI, teams rapidly adapted the same “video brain” to power very different outcomes—ranging from the narrative assembly of the Automatic Highlights Generator to the autonomous production of UGC Ad Creator videos.

What Teams Built

Each team built a working prototype that applies multimodal AI to a real-world use case.

Automatic Highlights Generator reimagines the fan experience by instantly stitching specific game events into bespoke, multi-language highlight reels.

ChatContextIQ turns simple prompts into strategic media plans, unlocking deep video intelligence through natural language.

SeniorCare AI uses privacy-masked computer vision to detect falls and track gait health, unlocking video feeds only during emergencies to ensure dignified, 24/7 protection.

Personalized Copilot creates a curated, role-specific workspace that pre-loads relevant brands, ad creatives, and context-aware prompts to jumpstart discovery the moment a user logs in.

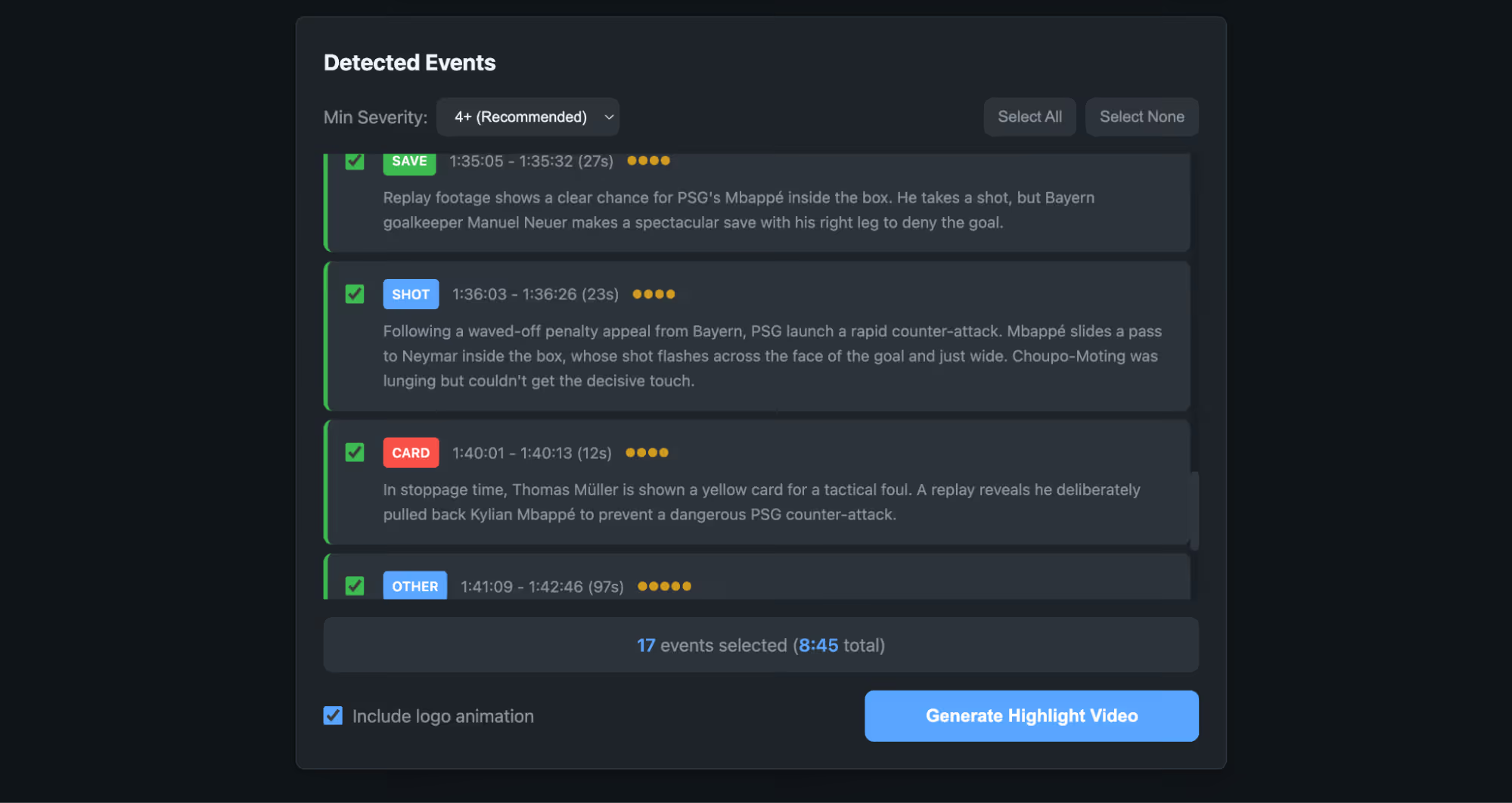

🎯 Automatic Highlights Generator

This tool reimagines the fan experience by generating bespoke highlight reels on demand. Instead of scrubbing through a full football match, users select specific event types, such as goals, fouls, or yellow cards, and the AI stitches them into a seamless narrative. To make the content truly global, the system integrates an AI-dubbing pipeline that automatically translates and syncs commentary into languages such as Spanish, French, or Hindi.
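The selection-and-stitching step can be illustrated with a small Python sketch. It assumes an upstream video model has already emitted timestamped events; the `Event` type, the event labels, the padding values, and the merge rule are all hypothetical and not taken from the team's implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    kind: str      # hypothetical labels, e.g. "goal", "foul", "yellow_card"
    time_s: float  # timestamp of the event in the match video, in seconds

def build_cut_list(events, wanted_kinds, pre_s=8.0, post_s=6.0):
    """Return merged (start, end) clip windows for the selected event kinds."""
    # Keep only the requested event types and walk them in chronological order.
    selected = sorted(
        (e for e in events if e.kind in wanted_kinds), key=lambda e: e.time_s
    )
    clips = []
    for e in selected:
        start, end = max(0.0, e.time_s - pre_s), e.time_s + post_s
        # Merge with the previous window when they overlap, so footage is not repeated.
        if clips and start <= clips[-1][1]:
            clips[-1] = (clips[-1][0], max(clips[-1][1], end))
        else:
            clips.append((start, end))
    return clips

events = [Event("goal", 600.0), Event("foul", 605.0),
          Event("yellow_card", 1800.0), Event("goal", 3.0)]
print(build_cut_list(events, {"goal", "foul"}))  # [(0.0, 9.0), (592.0, 611.0)]
```

Merging overlapping windows keeps a foul that happens seconds after a goal from replaying the same footage twice; the resulting (start, end) pairs could then be handed to whatever tool cuts and concatenates the video.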

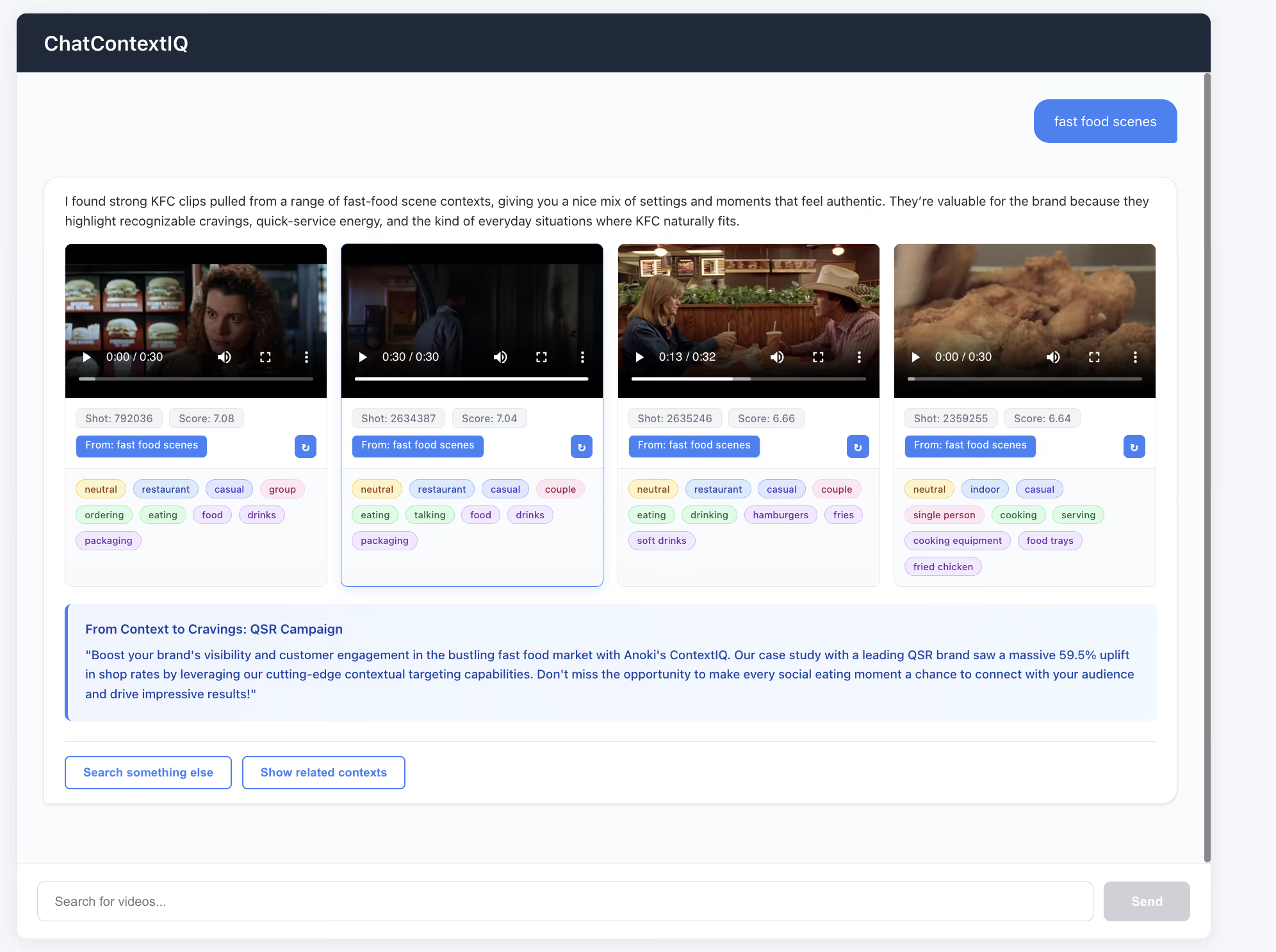

💬 ChatContextIQ

ChatContextIQ adds an intuitive conversational layer to Anoki’s ContextIQ platform, allowing marketers to harness the full power of video intelligence through natural language. By using generative AI to understand brand intent, it expands simple requests—like "burgers"—into rich semantic searches for "fast food lifestyle moments." The system leverages the platform’s deep frame-level analysis to interpret emotions, tone, and setting, effectively acting as an automated media planner. It further streamlines the workflow by surfacing relevant case studies from Anoki’s portfolio and sending smart follow-up summaries, ensuring no strategic insight is lost.

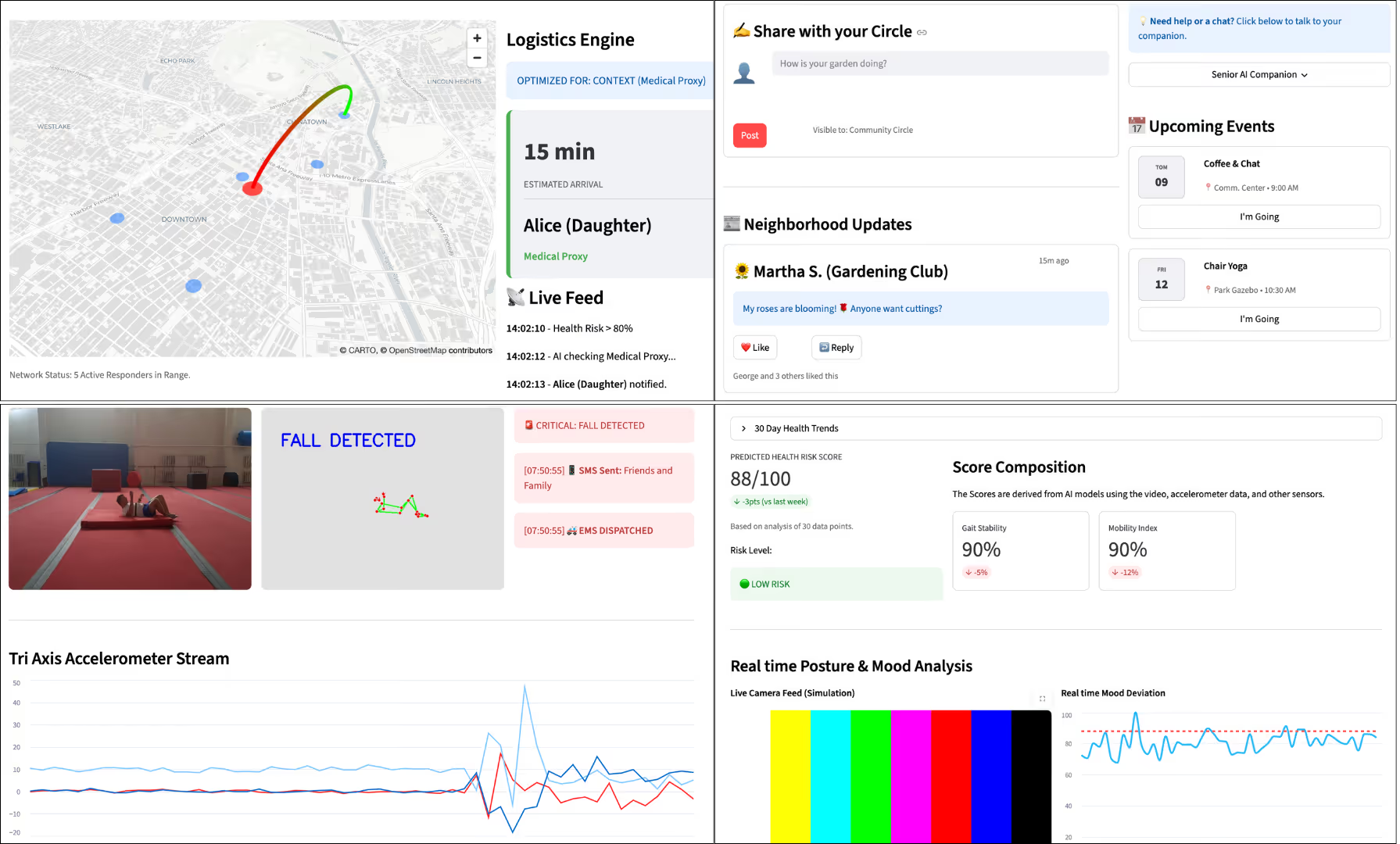

🧓 SeniorCare AI

SeniorCare AI tackles the privacy paradox in elder care by monitoring high-risk spaces like bathrooms without compromising dignity. The system uses local computer vision to track movement while keeping the video feed blacked out by default, unlocking it only when a fall is detected. Shifting from reactive rescue to proactive care, it also analyzes longitudinal metrics such as gait stability to predict health declines, while a built-in social interface keeps seniors connected to their community.
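The "blacked out until a fall is detected" behavior amounts to a gate sitting between the detector and the display. Below is a minimal sketch, assuming a local fall detector that outputs one boolean per frame; `PrivacyGate`, `blackout`, the list-of-rows frame format, and the 120-second unlock window are hypothetical.

```python
from dataclasses import dataclass, field

def blackout(frame):
    """Return an all-black frame of the same shape (frames modeled as lists of pixel rows)."""
    return [[0] * len(row) for row in frame]

@dataclass
class PrivacyGate:
    """Blacks out every frame unless a fall was detected within the unlock window."""
    unlock_seconds: float = 120.0  # hypothetical: how long the feed stays visible after a fall
    _unlocked_until: float = field(default=float("-inf"), init=False)

    def process(self, frame, timestamp_s, fall_detected):
        # The detector runs locally on every frame; only its boolean verdict reaches the gate.
        if fall_detected:
            self._unlocked_until = timestamp_s + self.unlock_seconds
        if timestamp_s <= self._unlocked_until:
            return frame  # emergency: pass the real frame through to caregivers
        return blackout(frame)

gate = PrivacyGate(unlock_seconds=10.0)
frame = [[255, 128], [64, 32]]
print(gate.process(frame, 0.0, fall_detected=False))   # [[0, 0], [0, 0]]
print(gate.process(frame, 5.0, fall_detected=True))    # [[255, 128], [64, 32]]
print(gate.process(frame, 16.0, fall_detected=False))  # [[0, 0], [0, 0]]
```

Keeping the default path as "black out" means a detector failure fails private rather than exposed, which matches the dignity-first goal described above.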

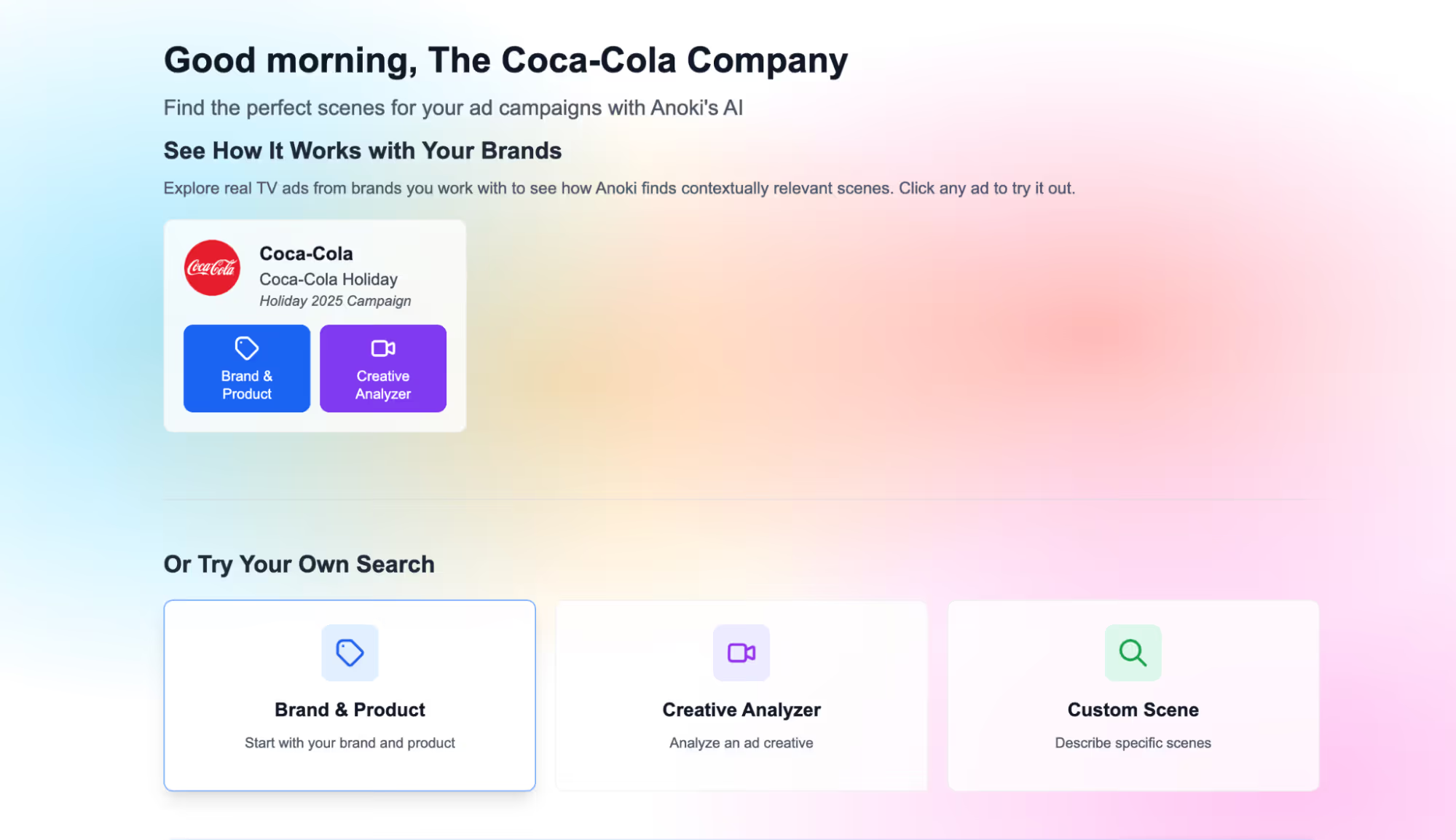

✨ Personalized Copilot

Personalized Copilot redefines the entry point to Anoki’s ContextIQ platform, replacing the friction of a blank search bar with a curated, brand-specific workspace. Upon login, the system detects the user’s role and instantly populates the screen with their specific active brands, products, and TV ad creatives. The tool powers three distinct workflows: Sales teams can generate white-labeled, logo-branded environments for bespoke prospect demos; Agencies access a consolidated portfolio view to manage multiple accounts internally; and Agency partners can isolate specific brands to present focused, client-ready views. With context-aware prompts (e.g., "Scenes with holiday gift-giving") pre-loaded alongside the visuals, it transforms the platform into a guided discovery engine that reinforces what is possible from the very first click.

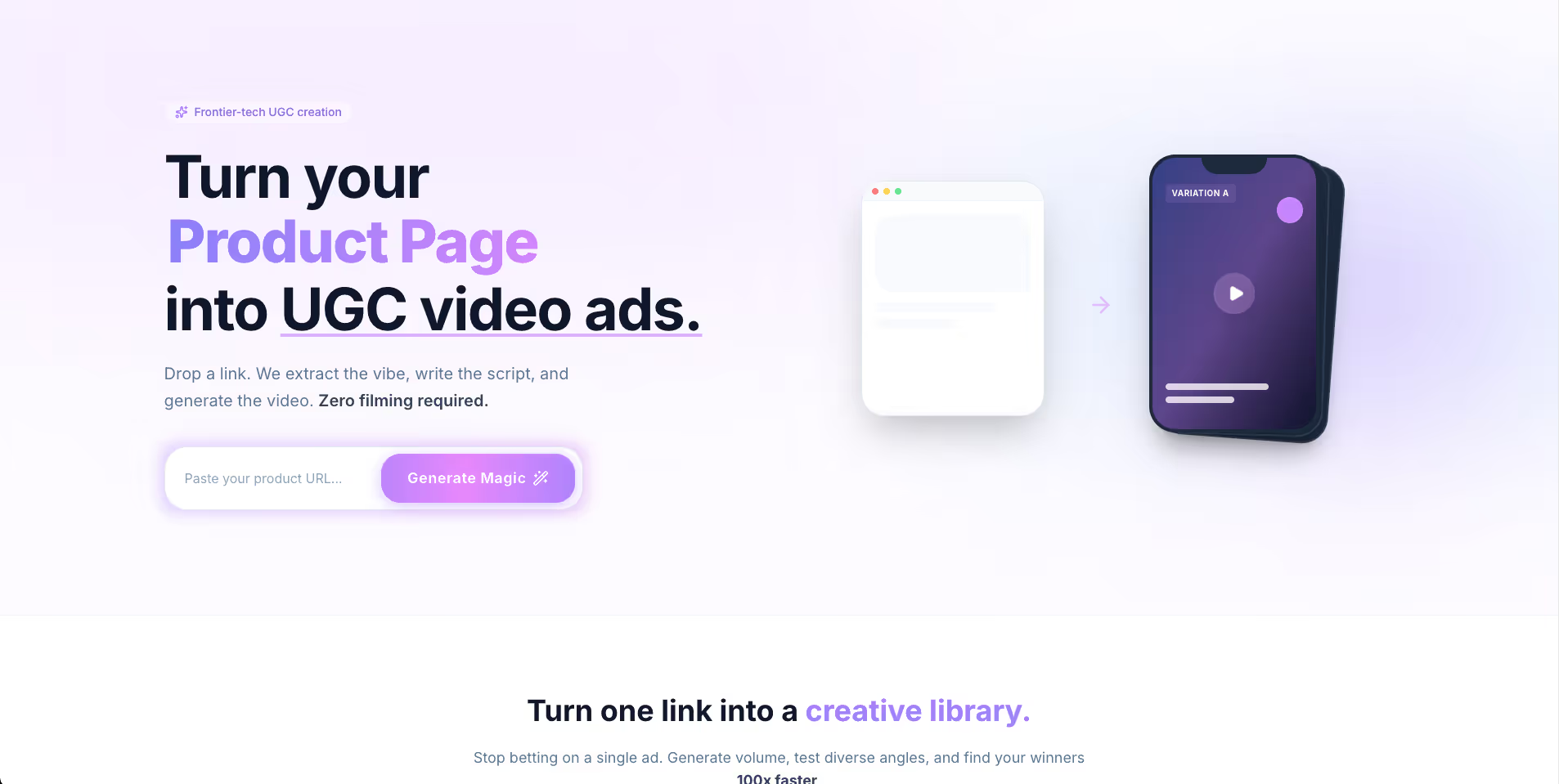

UGC Ad Creator transforms product URLs into high-performance video ads, autonomously generating scripts and visuals without filming a single frame.

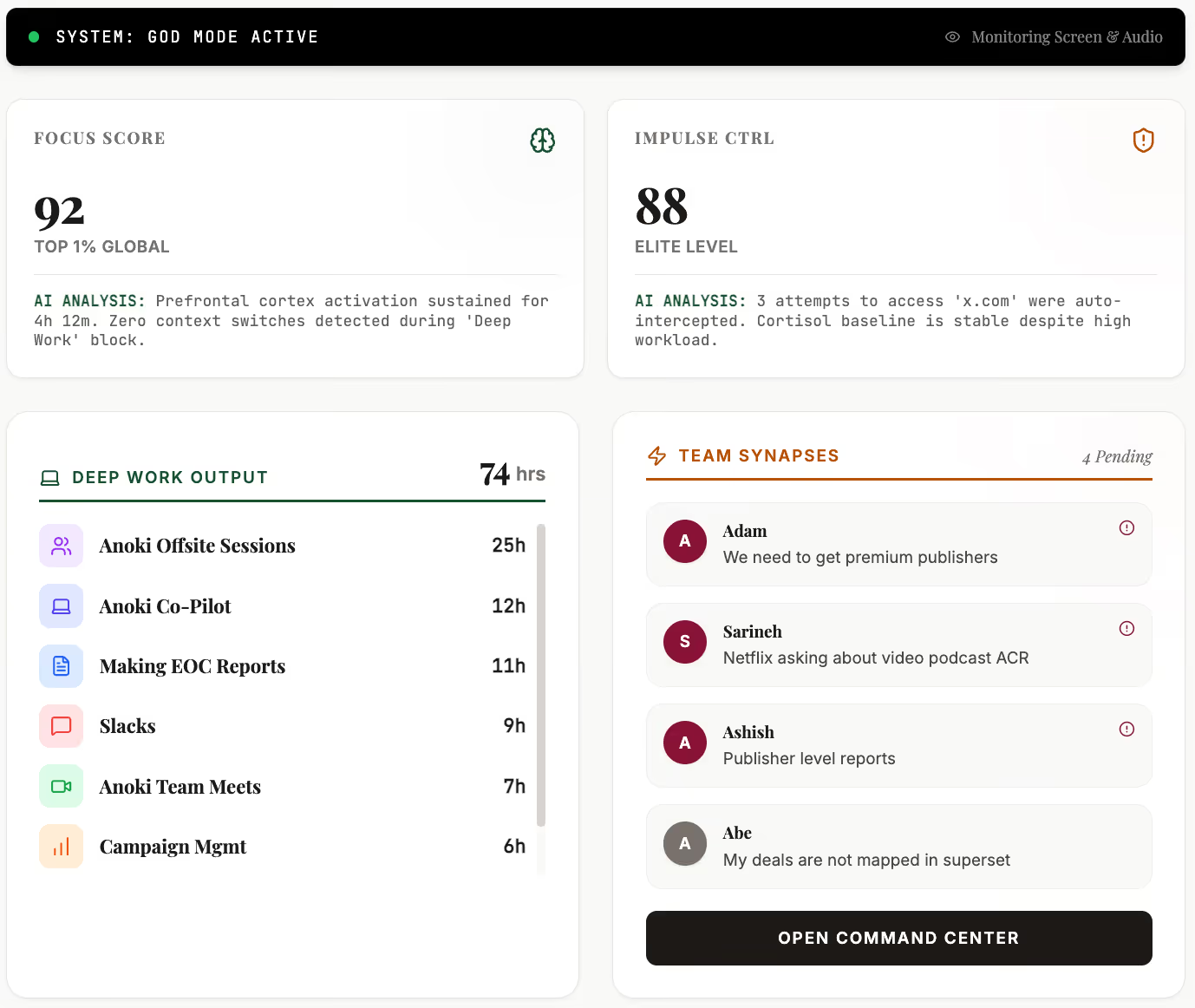

NeuroShift is an "always-on" AI operating system that protects human attention by detecting cognitive drift via multimodal signals and intervening with decisive, real-time actions.

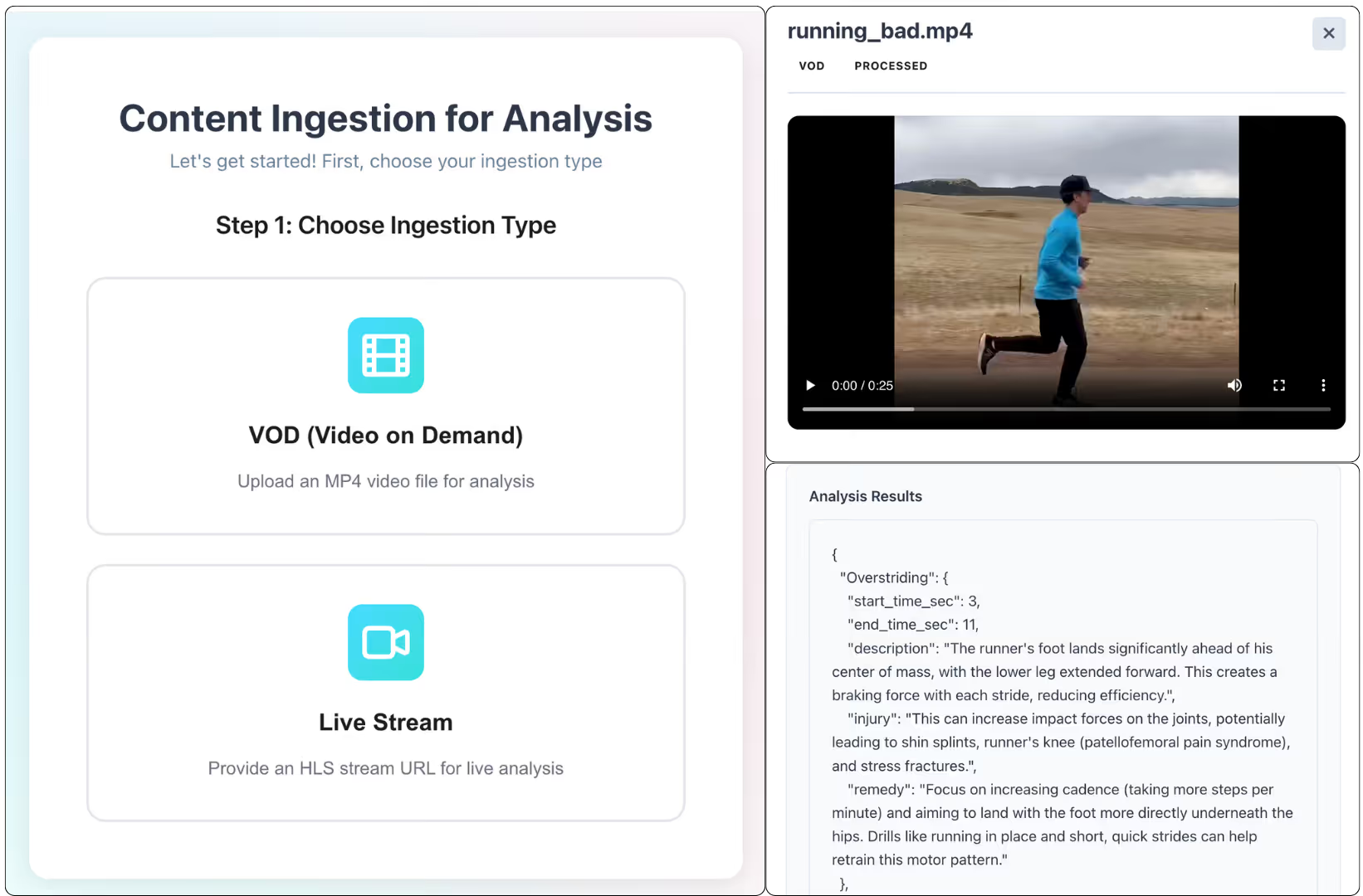

Coach’s Assistant is a unified portal that ingests diverse video formats to deliver on-demand biomechanical analysis for training and real-time event detection for live engagement.

🎬 UGC Ad Creator

Building on Anoki’s CreativeAI, this autonomous agent turns any product URL into a suite of high-performance UGC video ads. It automatically extracts brand DNA from product pages on sites like Amazon or Shopify to generate scroll-stopping scripts and visuals without filming a single frame. Designed for volume, the system simultaneously produces multiple hook and persona variations tailored for TikTok and Reels. It acts as an always-on creative director, letting teams remix attributes in real time to scale winning creatives instantly.
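Producing hooks and personas "for volume" is, at its core, a combinatorial expansion. A minimal sketch, assuming each creative brief is a plain dictionary; `variant_matrix`, `remix`, the field names, and the sample hooks are hypothetical, and the actual script and video generation is out of scope.

```python
from itertools import product

def variant_matrix(hooks, personas, platforms):
    """Enumerate every hook x persona x platform combination as a creative brief."""
    return [
        {"hook": hook, "persona": persona, "platform": platform}
        for hook, persona, platform in product(hooks, personas, platforms)
    ]

def remix(brief, **changes):
    """Return a copy of a brief with some attributes swapped; the original is untouched."""
    return {**brief, **changes}

briefs = variant_matrix(
    hooks=["Stop scrolling if...", "I tried this for a week", "3 reasons I switched"],
    personas=["busy parent", "college student"],
    platforms=["TikTok", "Reels"],
)
print(len(briefs))  # 12
print(remix(briefs[0], persona="fitness coach"))
```

Each brief would then be sent to a generation step; `remix` mirrors the idea of changing one attribute of a winning creative while keeping the rest fixed.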

🧬 NeuroShift — The Decisive OS

NeuroShift acts as an "always-on" AI operating system for human attention, treating cognitive control as critical infrastructure. Using multimodal analysis to track screen context and behavior, it detects cognitive drift in real time and intervenes with decisive actions rather than passive suggestions. Structured around a "brutal truth" dashboard and a personalized life roadmap, it aligns daily execution with high-level strategic goals, keeping high performers at Anoki strictly accountable to their potential.

🏃 Coach’s Assistant

The team built a unified coaching portal capable of ingesting diverse video formats—from recorded MP4s to live HLS streams. The system allows coaches to apply specific analytical models on demand, such as biomechanical stride analysis for runners or footwork assessment for badminton players. Extending beyond training, they also implemented real-time event detection for live matches, triggering instant alerts for goals to drive fan engagement.
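Turning a noisy per-frame goal detector into instant alerts usually needs some debouncing, so that one goal does not fire a burst of notifications. A minimal sketch, assuming the live HLS pipeline yields one goal-confidence score per sampled frame; `GoalAlerter`, its threshold, and its frame counts are hypothetical.

```python
class GoalAlerter:
    """Fires an alert when the goal score stays high for several frames, then cools down."""

    def __init__(self, threshold=0.8, min_frames=3, cooldown_frames=50):
        self.threshold = threshold              # hypothetical per-frame confidence cutoff
        self.min_frames = min_frames            # consecutive confident frames required
        self.cooldown_frames = cooldown_frames  # frames ignored after an alert fires
        self._streak = 0
        self._cooldown = 0

    def update(self, goal_score):
        """Feed one per-frame detector score; return True when an alert should fire."""
        if self._cooldown > 0:
            self._cooldown -= 1
            return False
        self._streak = self._streak + 1 if goal_score >= self.threshold else 0
        if self._streak >= self.min_frames:
            self._streak = 0
            self._cooldown = self.cooldown_frames
            return True
        return False

alerter = GoalAlerter(min_frames=3, cooldown_frames=5)
scores = [0.2, 0.9, 0.95, 0.9, 0.9, 0.9, 0.1, 0.9, 0.9, 0.9, 0.9]
fired = [i for i, s in enumerate(scores) if alerter.update(s)]
print(fired)  # [3]
```

Requiring several confident frames in a row trades a small amount of latency for fewer false alarms, and the cooldown suppresses duplicate alerts, for example while the same goal is still on screen.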

📺 Contextual Advertising, the Works!

This project demonstrates an end-to-end contextual advertising workflow. The system takes an ad (file or URL), extracts the brand’s context and feel, and identifies the best matching scene moments inside a video. It then instantly generates a stitched, playable stream—showing the content preview, the ad, and the continuation. Powered by Anoki ContextIQ’s scene-level understanding, it makes contextual placement tangible by visualizing the exact experience a viewer would see.
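The preview, ad, continuation structure can be expressed as a simple timeline. Below is a minimal sketch, assuming scene matching has already produced a similarity score per scene; `Segment`, `best_break_point`, `stitch_preview`, the file names, and the 30-second preview and continuation windows are hypothetical, and rendering the actual stream is left out.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Segment:
    source: str     # media file the segment is cut from
    start_s: float  # start offset within that file, in seconds
    end_s: float    # end offset within that file, in seconds

def best_break_point(scenes):
    """scenes: list of (start_s, end_s, similarity); break right after the best match."""
    _, end_s, _ = max(scenes, key=lambda scene: scene[2])
    return end_s

def stitch_preview(content, ad, ad_duration_s, break_at_s,
                   preview_s=30.0, continuation_s=30.0):
    """Build a content preview -> ad -> continuation timeline around one ad break.

    Clamping the continuation to the content's total length is omitted for brevity.
    """
    return [
        Segment(content, max(0.0, break_at_s - preview_s), break_at_s),
        Segment(ad, 0.0, ad_duration_s),
        Segment(content, break_at_s, break_at_s + continuation_s),
    ]

scenes = [(0.0, 42.0, 0.31), (42.0, 95.5, 0.87), (95.5, 130.0, 0.55)]
timeline = stitch_preview("episode.mp4", "ad.mp4", 15.0, best_break_point(scenes))
for seg in timeline:
    print(seg)
```

Breaking at the end of the best-matching scene, rather than in the middle of it, is one simple way to keep the insertion at a natural boundary.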

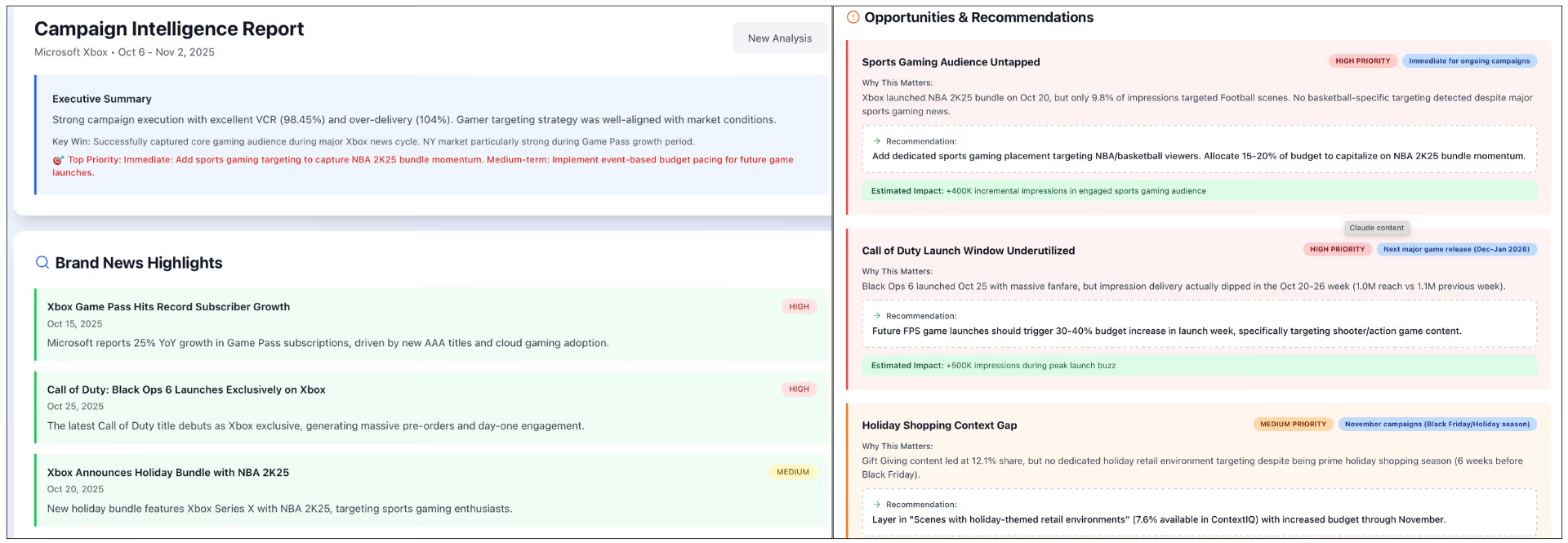

Campaign Performance IQ enhances reporting by cross-referencing ad metrics with real-world brand news, automatically identifying performance drivers and generating data-backed recommendations for future growth.

Personal Assistant for Business Analysts integrates a custom Model Context Protocol (MCP) server with EMR Serverless to autonomously execute Spark queries on massive ad logs, letting users derive complex insights using only natural language.

📊 Campaign Performance IQ

Campaign Performance IQ transforms the traditional end-of-campaign report into a strategic business development engine. Going beyond standard CTV metrics like VCR and Reach, it correlates placement-level performance with real-world brand news and market events that occurred during the flight. The system identifies hidden performance drivers, spots missed opportunities (such as product launches), and quantifies the potential impact of future tactics. By connecting the dots between ad delivery and market context, it turns a retrospective summary into a proactive roadmap for client revenue growth.
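One simple way to line up placement performance with market events is a pre/post window comparison around each event date. The sketch below is an illustration only: `event_lift`, the three-day window, and the completed-views numbers are hypothetical, and a before/after difference shows correlation, not proof that the event caused the change.

```python
from datetime import date, timedelta
from statistics import mean

def event_lift(daily_metric, event_day, window_days=3):
    """Relative change in a daily metric from the event day onward vs. the days before it.

    daily_metric maps datetime.date -> value; missing days are skipped.
    Returns None when either window has no data or the baseline is zero.
    """
    before = [daily_metric[d] for d in
              (event_day - timedelta(days=i) for i in range(1, window_days + 1))
              if d in daily_metric]
    after = [daily_metric[d] for d in
             (event_day + timedelta(days=i) for i in range(window_days))
             if d in daily_metric]
    if not before or not after or mean(before) == 0:
        return None
    baseline = mean(before)
    return (mean(after) - baseline) / baseline

completed_views = {
    date(2025, 3, 1): 100, date(2025, 3, 2): 110, date(2025, 3, 3): 90,
    date(2025, 3, 4): 120, date(2025, 3, 5): 130, date(2025, 3, 6): 110,
}
# Hypothetical product launch on March 4, during the flight.
print(event_lift(completed_views, date(2025, 3, 4)))  # 0.2
```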

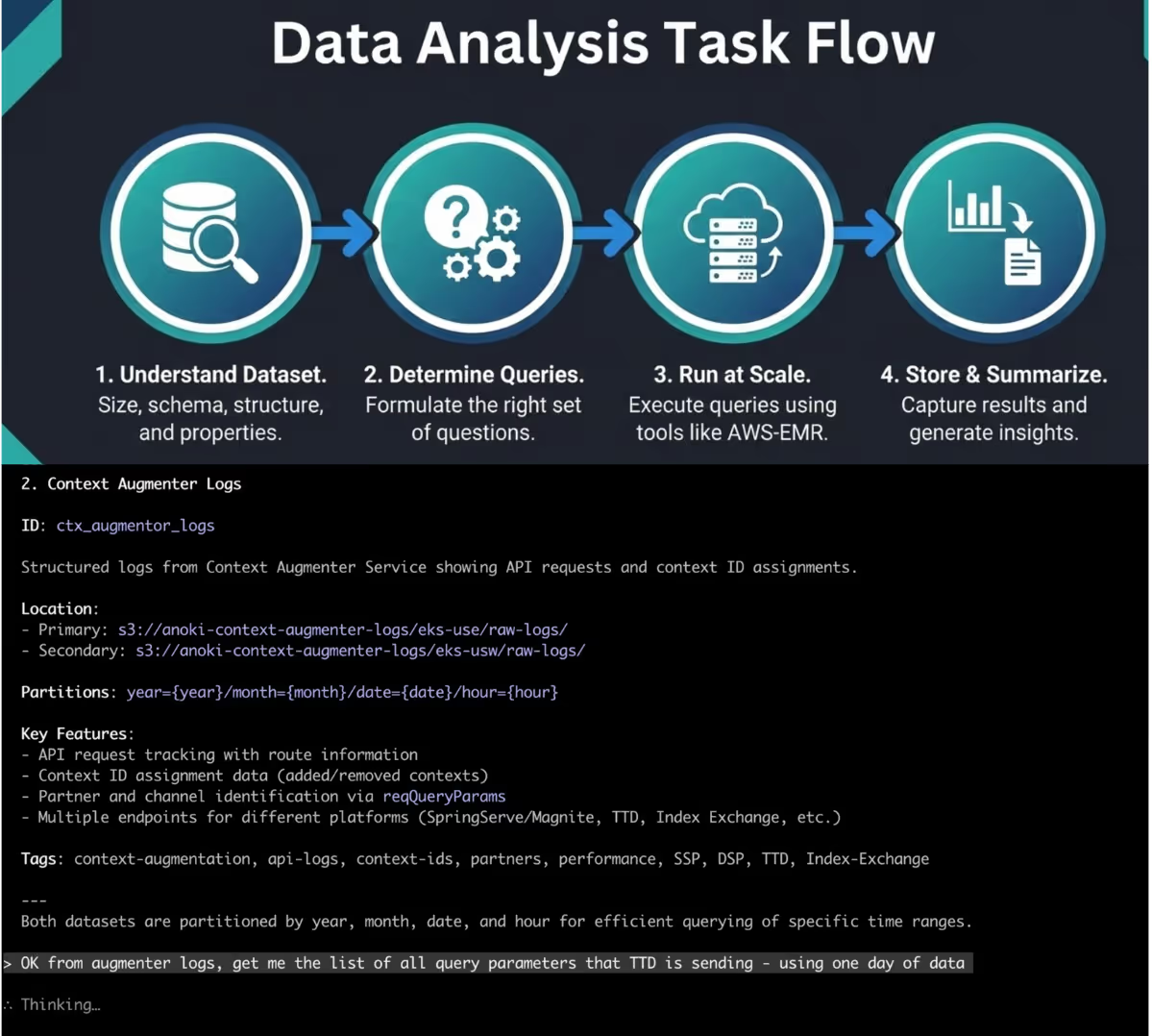

🧠 Personal Assistant for Business Analysts

To supercharge the Business Data Analysis (BDA) team, this project implements a custom Model Context Protocol (MCP) server for Claude Code. It bridges the gap between natural language questions and Anoki’s massive datasets, including ad server and context augmenter logs. By enabling the AI to autonomously execute Spark queries via EMR Serverless, it turns Claude into a capable analyst that can retrieve complex insights on demand, significantly reducing the technical overhead of data exploration.

🛠 Block System Debugger

Block System Debugger turns complex infrastructure debugging into a simple conversation. It allows non-technical users to query the Anoki adstack to analyze trends and pinpoint root causes for KPI drops—without writing code. By orchestrating specialized sub-agents for systems like SpringServe, it abstracts away the complexity of raw data logs, empowering anyone in the organization to diagnose system health through a natural chat interface.

The Bottom Line

This hackathon validated a core truth about the state of AI: a robust video intelligence layer has enormous horizontal potential. In just eight hours, our teams delivered working prototypes spanning healthcare, productivity, and sports, with several directly extending the core capabilities of ContextIQ and CreativeAI.

The skill and speed on display speak for themselves: several of these projects have already moved from the hackathon floor into our live product suite. With the right team and the right multimodal infrastructure, the path from a raw idea to a deployed solution is no longer measured in months, but in days.

Ready to explore AI for CTV?

Contact us. Watch for our next newsletter.

Copyright © 2025 Anoki. All rights reserved.