Beyond Binary: Redefining Brand Safety for the Modern Media Landscape

Introduction: Why Brand Safety Matters More Than Ever

A single misplaced ad can undo years of brand-building. Imagine a family food brand’s cheerful spot running directly after a violent crime scene: the jarring juxtaposition instantly damages consumer trust and leaves a lasting negative impression. This risk isn't hypothetical. A well-known restaurant chain learned this the hard way when its lighthearted ad for drink specials ran split-screen with live coverage of an international crisis [1]. The mismatch sparked ridicule and forced the brand into damage control.

These aren’t isolated incidents but symptoms of advertising in the modern media landscape. Today's streaming-first world offers a vast ocean of video content, presenting both unprecedented opportunity and risk, where a single misstep can trigger immediate public backlash and financial fallout.

This is why brand safety has evolved from a simple checkbox to a non-negotiable pillar of the entire media ecosystem, critical for everyone involved:

- For Advertisers, it’s about protecting their reputation and investment, ensuring their message appears in environments that align with their brand values.

- For Publishers, it’s about safeguarding their revenue and creating a premium, trustworthy environment that attracts and retains high-quality advertisers.

- For the Audience, it’s about the experience. A well-placed, relevant ad feels additive, while a jarring one disrupts engagement and fosters negative sentiment towards the brand.

Nowhere is this delicate balance more critical than in the world of video. Full-screen, lean-back experiences command a viewer's full attention, making any negative association more powerful and damaging. The ad is not one of many items on a page; it is the experience. And with content spread across thousands of apps and FAST (free ad-supported streaming TV) channels, the sheer scale makes manual vetting and simple filtering impractical.

This is a reality where traditional keyword filters and broad content tags are obsolete. In video, context is everything.

The Devil in the Details: Why True Brand Safety is Deceptively Complex

On paper, brand safety seems simple: avoid the high-risk categories advertisers worry about most, like violence, adult content, and substance use.

But in reality, these broad labels hide a labyrinth of nuance. True brand safety at scale requires solving the core challenges that cause simplistic, off-the-shelf solutions to break down. Here’s why it’s so tricky:

1. The Granularity Trap: “Unsafe” is Not a Single Category

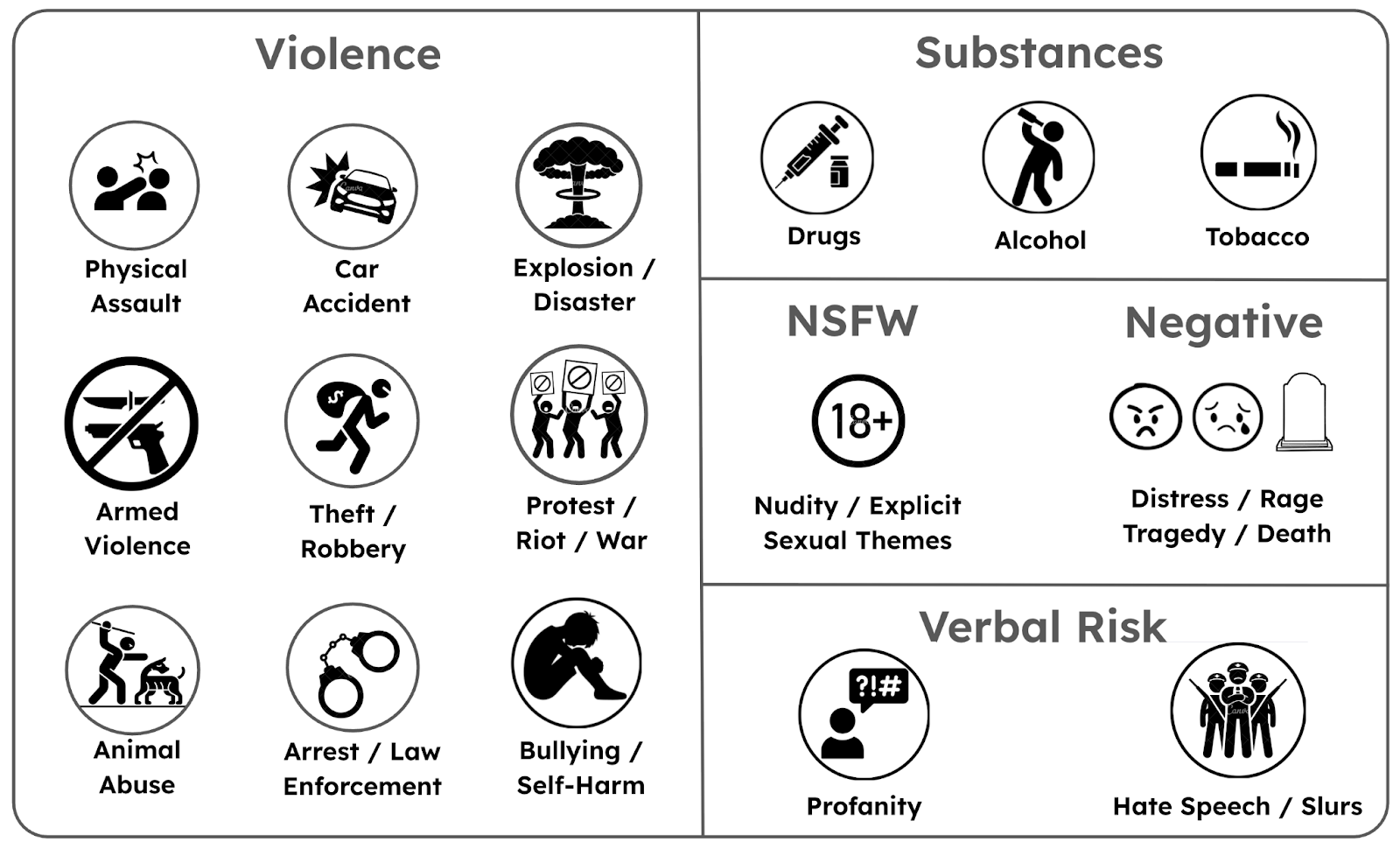

High-level categories hide enormous internal variety, making broad labels dangerously imprecise. A truly intelligent system must understand the nuances within each risk:

- “Violence” isn’t one thing—it spans everything from brawls and shootings to car accidents and terrorism.

- “NSFW” content can range from mildly suggestive dialogue and revealing attire to explicitly simulated acts and graphic nudity.

- “Substance Use” must differentiate between a celebratory glass of wine, the use of tobacco, and the depiction of illicit drug abuse.

- “Verbal Risk” requires distinguishing between mild profanity and targeted hate speech.

2. Beyond Black and White: The Need for Severity

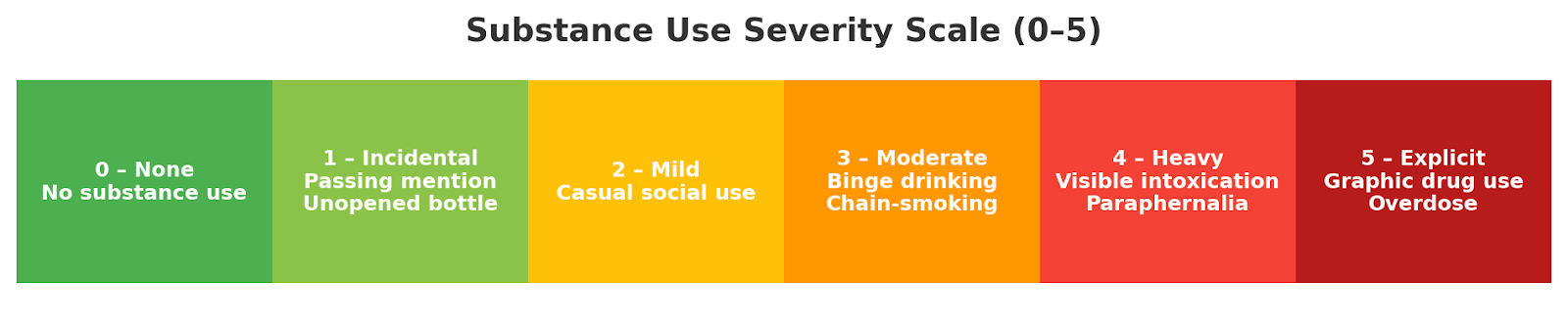

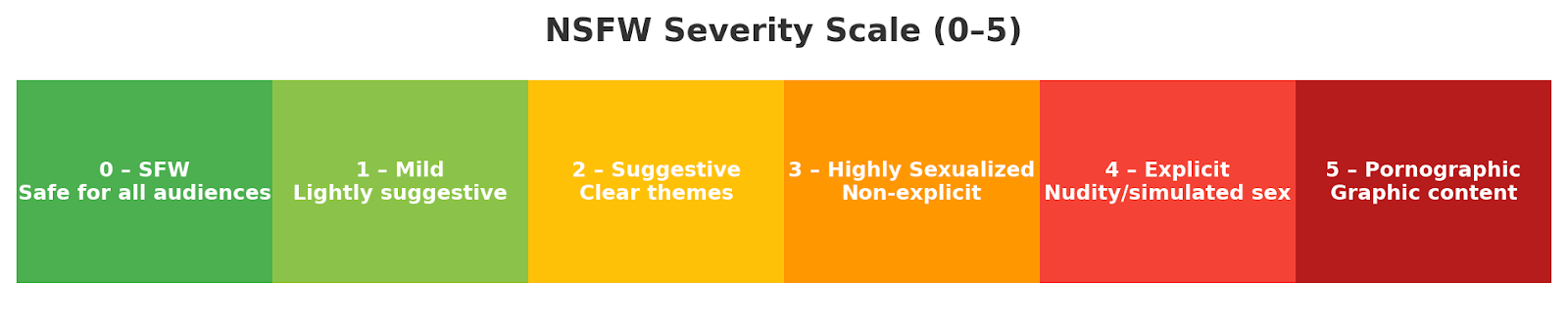

Even within the same granular subtype, not all content is equally harmful. A sitcom fender-bender is not the same as a fiery highway crash. A champagne toast at a wedding is different from a depiction of binge drinking.

Yet most solutions treat risk as binary: safe vs. unsafe. Without a severity spectrum, advertisers are forced into blunt choices: over-blocking and losing reach, or under-blocking and risking reputation.

3. The Data Bias Blindspot: When AI Learns the Wrong Lessons

The demand for granularity and severity runs headfirst into the reality of training data. Public datasets are limited in scale and narrow in scope, leading models to learn incomplete or skewed patterns, such as:

- Car accident bias: Violence datasets like XD-Violence and UCF-Crime [2, 3] over-index on crashes, teaching models to flag even harmless car scenes as a risk.

- Crowd bias: Over-indexing on riot and protest footage [2, 3] leads models to misclassify concert or stadium crowds as violent events.

- Animation bias: NSFW datasets like LSPD [4] contain explicit animation but little safe animation, causing models to mislabel cartoons as unsafe.

- Skin exposure bias: Datasets often lack combat sports or beach clips, so boxing matches and swimsuits are wrongly flagged as sexual because of high skin exposure.

These biases aren't intentional; they are the natural consequence of using finite, curated datasets to prepare for the infinite, chaotic variety of real-world media.

4. The Infinite Variety Problem: The Ferris Wheel is on Fire

Even with better data, the infinite variety and unpredictability of real-world content make context one of the hardest problems to solve. Training datasets can never cover every possible scenario, so when models encounter new or unusual combinations in the wild, they often fail to generalize correctly.

- An amusement park is usually a safe, happy setting — until, in a disaster movie, the Ferris wheel is on fire.

- Pills on a table could mean life-saving medicine — or illicit drugs.

- A bar could be a celebration — or a fight about to erupt.

These ambiguous and unexpected combinations are everywhere in film and television. Without multimodal awareness, models can’t reliably separate safe depictions from unsafe ones.

5. The Trojan Horse: How Unsafe Content Hides in "Safe" Contexts

Finally, the most nuanced challenge in brand safety arises from an unexpected place: contextual targeting itself. Advertisers may assume that targeting a positive context—like a “romantic dinner” or a “wedding celebration”—is an inherent form of brand safety.

This creates a dangerous blindspot. The targeted content can perfectly match the context, yet still be deeply brand-unsafe. The “romantic dinner” can be a tearful breakup. The “wedding celebration” can devolve into a drunken brawl.

This is the ultimate brand safety Trojan Horse: a high-risk scene smuggled inside a perfectly relevant, supposedly "safe" package. It’s the risk you don’t see coming, precisely because you thought you were already in a safe place.

Our Solution

Confronted with these challenges (granularity, severity, bias, and the need for contextual nuance), we built a solution from the ground up for the complexities of the modern media landscape.

Our solution is a multi-layered system. The first three pillars form our core brand safety engine, which can be deployed as a powerful standalone solution. The fourth pillar shows how this engine is integrated to power safer contextual targeting.

1. A Foundation of Multidimensional Models

We deploy dedicated classifiers for each risk type: violence, NSFW, substances, profanity, and hate speech. Specialization ensures that each model develops domain expertise rather than relying on a single blunt detector.

2. Multimodal Understanding as the Input

To solve the context problem, our models are multimodal. They don't just look at pixels; they ingest and analyze signals from video, audio, and text together [5]. By learning from these combined embeddings, our AI understands the full story behind a scene, allowing it to detect risks that would be invisible to a system looking at visuals alone.
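The article does not describe how the video, audio, and text signals are combined. As a minimal sketch of one common approach (simple concatenation of normalized per-modality vectors), the idea could look like the following. The function names and the tiny example vectors are hypothetical and are not taken from ContextIQ.

```python
from __future__ import annotations

import math
from typing import Sequence


def l2_normalize(vec: Sequence[float]) -> list[float]:
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        # An all-zero vector (e.g. a silent audio track) stays all zeros.
        return [0.0 for _ in vec]
    return [x / norm for x in vec]


def fuse_embeddings(
    video: Sequence[float],
    audio: Sequence[float],
    text: Sequence[float],
) -> list[float]:
    """Hypothetical fusion step: normalize each modality, then concatenate.

    A downstream risk classifier would take the fused vector as its input.
    """
    fused: list[float] = []
    for modality in (video, audio, text):
        fused.extend(l2_normalize(modality))
    return fused


# Toy example with made-up 2-, 2-, and 1-dimensional embeddings.
fused = fuse_embeddings(video=[3.0, 4.0], audio=[0.0, 0.0], text=[2.0])
print(len(fused))  # 5
```

Real systems typically use learned fusion rather than plain concatenation; the point here is only that a single classifier sees all three modalities at once.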

3. Nuanced Severity Scoring as the Output

The output is not a binary “safe” or “unsafe” label. Each model produces a numeric severity score that quantifies the level of risk. Severity is enforced during training with clear guidelines (see figure: NSFW and Substance scoring snapshot), defining what constitutes a mild, moderate, or severe infraction.

This transforms brand safety from a blunt instrument into a precision tool, allowing advertisers to set thresholds that reflect their unique brand values. For example, one brand may tolerate mild profanity, while another blocks it entirely.
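To make the threshold idea concrete, here is a minimal sketch of per-brand severity limits. It assumes severity scores on a 0.0 to 1.0 scale, which the article does not specify. `BrandPolicy`, `RISK_TYPES`, and the example numbers are hypothetical names and values chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Risk types named in the article; the identifiers themselves are assumptions.
RISK_TYPES = ("violence", "nsfw", "substances", "profanity", "hate_speech")


@dataclass
class BrandPolicy:
    """Hypothetical per-brand limits on severity (assumed scale: 0.0 to 1.0)."""

    name: str
    max_severity: dict[str, float] = field(default_factory=dict)
    default_max: float = 0.0  # risk types without an explicit limit are blocked

    def allows(self, scores: dict[str, float]) -> bool:
        """Return True if every risk score is within this brand's limit."""
        # A missing score is treated as 0.0 here; a production system
        # might instead fail closed and reject the scene.
        return all(
            scores.get(risk, 0.0) <= self.max_severity.get(risk, self.default_max)
            for risk in RISK_TYPES
        )


# A scene with mild profanity and nothing else.
scene_scores = {"profanity": 0.3}

tolerant = BrandPolicy("tolerant_brand", max_severity={"profanity": 0.4})
strict = BrandPolicy("strict_brand")  # blocks anything above 0.0

print(tolerant.allows(scene_scores))  # True
print(strict.allows(scene_scores))    # False
```

The same scene is acceptable to one brand and blocked for another, which a binary safe/unsafe label cannot express.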

These three pillars form the core of our Brand Safety engine. This engine provides the foundational intelligence to ensure ad campaign safety across any environment.

4. Application: Safety-Aware Contextual Targeting

The final pillar integrates our Brand Safety engine directly into the media buying process for truly safety-driven contextual targeting. In this application, advertisers use contextual queries—phrases like “fashion scenes” or “family game night”—to find the precise moments they want to place their ads against [5].

Our system treats every query as a two-part challenge:

- Relevance: First, it finds all the scenes that are semantically relevant to the advertiser's targeting query.

- Safety: Then, it evaluates each of those relevant scenes using the nuanced severity scores from our expert models.

Our system then re-ranks the search results by combining both of these critical signals: relevance and safety. This two-step process is how we disarm the Trojan Horse, ensuring that the safest and most relevant moments rise to the top.
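The article does not give the formula used to combine the two signals. As a sketch under stated assumptions, the two-step process could be approximated by multiplying relevance by a safety factor derived from the worst severity score. The `Scene` type, the 0.0 to 1.0 scales, and the multiplicative rule are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Scene:
    scene_id: str
    relevance: float            # assumed 0.0 to 1.0, from the retrieval step
    severity: dict[str, float]  # risk type -> assumed 0.0 to 1.0 severity


def combined_score(scene: Scene) -> float:
    """Hypothetical rule: relevance discounted by the worst risk in the scene."""
    worst = max(scene.severity.values(), default=0.0)
    return scene.relevance * (1.0 - worst)


def rerank(scenes: list[Scene]) -> list[Scene]:
    """Order scenes so that relevant and safe moments come first."""
    return sorted(scenes, key=combined_score, reverse=True)


# Both scenes match a "wedding celebration" query; one is a Trojan Horse.
scenes = [
    Scene("wedding_brawl", relevance=0.95,
          severity={"violence": 0.8, "substances": 0.6}),
    Scene("wedding_toast", relevance=0.85,
          severity={"substances": 0.1}),
]

for scene in rerank(scenes):
    print(scene.scene_id, round(combined_score(scene), 3))
# wedding_toast 0.765
# wedding_brawl 0.19
```

With a multiplicative rule, high relevance cannot rescue a severe scene: the brawl is the closest match to the query but drops below the toast once safety is factored in.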

Conclusion

The era of binary brand safety is over. Today’s media landscape demands more than just blunt blocking; it requires the intelligence to separate safe from unsafe across infinite scale, nuance, and context.

Anoki’s ContextIQ brand safety engine is built to do exactly that. Powered by expert models, multimodal analysis, and severity-aware scoring, it delivers the granular control brands need to protect reputation, maintain trust, and meet suitability standards. It is a versatile solution, designed to be deployed as a powerful standalone safeguard or as the foundation for smarter contextual targeting.

The result is the confidence to advertise at scale, knowing every placement is verifiably safe and builds, rather than erodes, consumer trust.

References

[1] Dumb Applebee's Ad Disrupts CNN's Ukraine Coverage, https://www.youtube.com/watch?v=IsGbndi9eek

[2] Wu, P., Liu, J., Shi, Y., Sun, Y., Shao, F., Wu, Z., & Yang, Z. (2020). Not only Look, but also Listen: Learning multimodal violence detection under weak supervision. In European Conference on Computer Vision (ECCV). Springer. https://roc-ng.github.io/XD-Violence/

[3] Yuan, T., Zhang, X., Liu, K., Liu, B., Chen, C., Jin, J., & Jiao, Z. (2023). Towards surveillance video-and-language understanding: New dataset, baselines, and challenges. arXiv. https://arxiv.org/abs/2309.13925

[4] Phan, D.-D., Nguyen, T.-T., Nguyen, Q.-H., Tran, H., Nguyen, K.-N.-K., & Vu, D.-L. (2022). LSPD: A Large-Scale Pornographic Dataset for Detection and Classification. International Journal of Intelligent Engineering and Systems, 15(1). https://doi.org/10.22266/ijies2022.0228.19

[5] The Multi-Modal Edge in Video Understanding with Anoki ContextIQ, https://www.anoki.ai/post/the-multi-modal-edge-in-video-understanding-with-anoki-contextiq