From Safe to Suitable: How Sentiment Analysis Unlocks Ad Effectiveness

People don't remember ads; they remember feelings. And in the world of video advertising, the feeling of the content your ad follows becomes the feeling associated with your brand. A scene of triumph can make your message feel inspiring. A scene of tension can make it feel intrusive.

Brand safety is the non-negotiable foundation of modern advertising, ensuring a message never appears next to harmful content. But to move from simply safe to truly suitable and effective, we must add the next layer of intelligence: emotional context. Even when an ad is placed in perfectly "safe" content, a mismatch in emotional tone can undermine your campaign's goals.

Anoki’s multimodal sentiment analysis was built for this next frontier.

Our system augments essential brand safety protocols with a deep understanding of the emotional DNA of every video segment. It addresses two critical needs:

- Proactive Prevention: It works alongside brand safety tools to identify and filter out content with strongly negative sentiment, protecting your brand from emotionally inappropriate moments. For instance, a joyful toy commercial might technically be “safe” next to a scene of a child saying a painful goodbye to a parent, but the sadness would hollow out the ad’s intended message. Similarly, a financial services ad about “trust” feels misplaced if it follows a heated reality show argument.

- Intelligent Alignment: It actively seeks out “on-tone” scenes, ensuring your message is delivered when the viewer is most receptive to its emotional core. Imagine that same toy ad appearing after a scene where kids are laughing together, or the financial services spot running after a moment of reconciliation and relief. In both cases, the ad doesn’t just avoid harm; it benefits from emotional momentum.

The outcome is a clear competitive advantage: fewer jarring adjacencies, more suitable inventory, and ad creative that lands exactly as intended, aligning message, moment, and mood.

How We Understand a Scene's True Sentiment

To solve the problem of emotional context, we can't just analyze words on a page. We need to understand video content the way a person does, by interpreting several signals at once. Multimodal sentiment analysis draws on multiple sources of information (visuals, audio, and text) to capture a scene's complete sentiment profile. This holistic method is more reliable than relying on any single signal because it mirrors how people actually experience a scene.

Anoki analyzes three key layers to determine a scene's true "vibe":

- Visual Cues (What We See): We analyze on-screen actions and expressions, from a subtle smile to an intense chase scene, as well as the film's coloring and lighting.

- Audio Cues (What We Hear): Our system listens to the tone of voice and analyzes the musical score. Is it a cheerful melody or an ominous soundtrack?

- Textual Cues (What We Read): We analyze the dialogue and any on-screen text for explicitly positive or negative language.

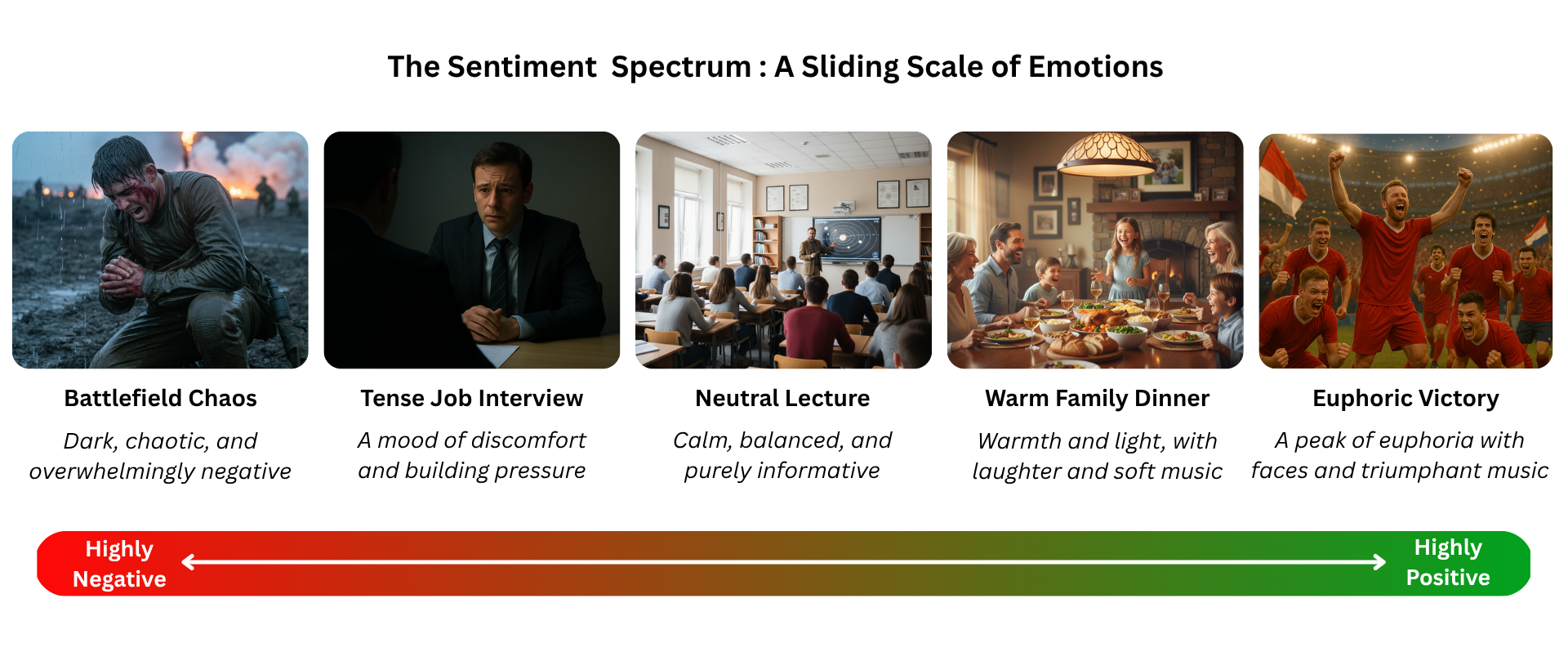

Instead of boxing moments into “positive,” “neutral,” or “negative,” we grade them on a continuous spectrum from 0.0 to 1.0. This lets us capture how deeply an emotion is felt, not just what it is.

This spectrum doesn’t just measure emotion. It gives advertisers and storytellers surgical control: aligning messages with joy, steering clear of violence, and placing content with precision.
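To make the continuous score concrete, here is a minimal sketch of how a placement rule might use it for both proactive prevention and intelligent alignment. It assumes that 0.0 is the most negative end of the spectrum and 1.0 the most positive; the thresholds `avoid_below` and `prefer_above`, and the `Scene`, `classify_slot`, and `rank_slots` names, are hypothetical illustrations rather than Anoki's API.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    scene_id: str
    sentiment: float  # assumption: 0.0 = most negative, 1.0 = most positive


def classify_slot(scene: Scene, avoid_below: float = 0.3, prefer_above: float = 0.7) -> str:
    """Hypothetical rule: avoid strongly negative scenes, prefer strongly positive ones."""
    if not 0.0 <= scene.sentiment <= 1.0:
        raise ValueError(f"sentiment out of range: {scene.sentiment}")
    if scene.sentiment < avoid_below:
        return "avoid"      # proactive prevention
    if scene.sentiment >= prefer_above:
        return "prefer"     # intelligent alignment for an upbeat ad
    return "eligible"       # acceptable, but no particular emotional boost


def rank_slots(scenes: list[Scene], avoid_below: float = 0.3, prefer_above: float = 0.7) -> list[Scene]:
    """Drop avoided scenes and order the rest from most to least positive."""
    kept = [s for s in scenes if classify_slot(s, avoid_below, prefer_above) != "avoid"]
    return sorted(kept, key=lambda s: s.sentiment, reverse=True)


scenes = [Scene("farewell", 0.15), Scene("interview", 0.45), Scene("kids_laughing", 0.9)]
print([s.scene_id for s in rank_slots(scenes)])  # ['kids_laughing', 'interview']
```

Because the score is continuous rather than a three-way label, the same rule can be retuned per campaign simply by moving the thresholds.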

The Science of Sentiment: Navigating a Complex Landscape

Teaching an AI to understand human emotion is difficult because it means grappling with two significant hurdles: subjectivity and mixed emotions. Analyzing our extensive dataset shows exactly why these challenges demand a sophisticated, data-driven solution.

The Challenge of Subjectivity

Sentiment is not a fact; it's a personal judgment. A scene might be labeled "neutral" by one person but "slightly positive" by another, creating a major obstacle for training an AI. Figure 2, which compares the labels of two of our expert annotators, shows this challenge in action.

The bright diagonal line in the plot shows that most clips receive the same sentiment label from both annotators. Importantly, where annotators disagree, the differences are small and systematic, typically involving confusion between neighboring categories like ‘Medium Negative’ and ‘High Negative’. This reflects a disagreement about emotional intensity, not about the underlying emotion. Because disagreements rarely flip a clip from negative to positive, averaging several annotators’ labels adjusts a clip’s intensity slightly without changing its polarity, which is why our consensus method produces stable and reliable sentiment scores.
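The kind of comparison behind a plot like this can be sketched in a few lines: build a confusion matrix between two annotators, then check how many disagreements are one step apart and whether any flip polarity. The seven-level scale is an assumption; the text names ‘High Negative’, ‘Medium Negative’, ‘Neutral’, and ‘High Positive’, and the remaining levels are filled in symmetrically for illustration. All function names are hypothetical.

```python
LABELS = [
    "High Negative", "Medium Negative", "Low Negative", "Neutral",
    "Low Positive", "Medium Positive", "High Positive",
]  # assumed symmetric 7-level scale
INDEX = {label: i for i, label in enumerate(LABELS)}
NEUTRAL = INDEX["Neutral"]


def polarity(label: str) -> int:
    """-1 for negative labels, 0 for Neutral, +1 for positive labels."""
    offset = INDEX[label] - NEUTRAL
    return (offset > 0) - (offset < 0)


def confusion_matrix(a: list[str], b: list[str]) -> list[list[int]]:
    """Rows are annotator A's labels, columns annotator B's; the diagonal is agreement."""
    if len(a) != len(b):
        raise ValueError("both annotators must label the same clips")
    m = [[0] * len(LABELS) for _ in LABELS]
    for la, lb in zip(a, b):
        m[INDEX[la]][INDEX[lb]] += 1
    return m


def agreement_summary(a: list[str], b: list[str]) -> dict[str, float]:
    """Exact-agreement rate, share of disagreements that are adjacent, and polarity flips."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    gaps = [abs(INDEX[x] - INDEX[y]) for x, y in zip(a, b)]
    disagreements = [g for g in gaps if g > 0]
    # A flip means one annotator chose a negative label and the other a positive one.
    flips = sum(polarity(x) * polarity(y) < 0 for x, y in zip(a, b))
    return {
        "exact": sum(g == 0 for g in gaps) / len(gaps),
        "adjacent_share": (sum(g == 1 for g in disagreements) / len(disagreements)) if disagreements else 1.0,
        "polarity_flips": float(flips),
    }
```

A high `exact` rate corresponds to the bright diagonal, while a high `adjacent_share` with few `polarity_flips` corresponds to the intensity-only disagreements described above.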

The Challenge of Mixed Emotions

When we look at sentiment across video content, one truth becomes clear: most scenes aren’t purely positive or purely negative. Instead, they sit somewhere in between, leaning mildly positive or mildly negative, often containing hints of both. Truly extreme emotions, whether euphoric or devastating, are surprisingly rare.

This matters because a blunt binary system would flatten all this nuance. Labeling both a bittersweet farewell and a tense job interview as simply “Negative” erases the subtle but important differences in tone. Fine-grained sentiment scoring is what makes it possible to capture these shades and apply them intelligently.

The following distributions illustrate this balance: scenes cluster around the middle of the sentiment spectrum rather than the extremes, showing how rarely content expresses pure positivity or negativity.

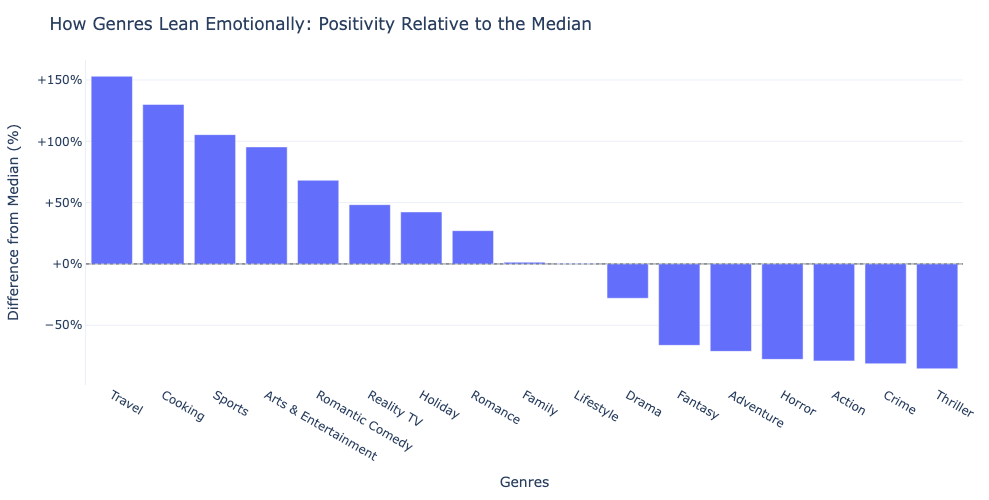

The pattern becomes even more interesting when viewed across genres. While some categories tend to evoke more upbeat emotions and others lean toward the intense or somber, no genre is emotionally uniform. Travel and Cooking content generally feels uplifting, yet it still includes moments of tension or frustration. Conversely, more intense genres such as Crime or Horror often contain intervals of relief, resolution, or even joy. This interplay of highs and lows captures the challenge of mixed emotions, where contrasting feelings coexist within the same narrative space.

By indexing sentiment on a continuous spectrum and benchmarking it against each genre, our system respects these nuances. Advertisers can avoid unsuitable adjacencies without discarding entire categories, unlocking broader inventory and achieving more precise emotional alignment.
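One plausible way to benchmark a score against its genre is a within-genre percentile rank, sketched below. This is an assumption about what such benchmarking could look like, not a description of Anoki's implementation, and the genre samples are made-up numbers used only to exercise the code.

```python
from bisect import bisect_left, bisect_right
from collections import defaultdict


def group_by_genre(scenes: list[tuple[str, float]]) -> dict[str, list[float]]:
    """Collect (genre, sentiment) pairs into sorted per-genre score lists."""
    groups: dict[str, list[float]] = defaultdict(list)
    for genre, score in scenes:
        groups[genre].append(score)
    return {genre: sorted(scores) for genre, scores in groups.items()}


def genre_percentile(score: float, sorted_genre_scores: list[float]) -> float:
    """Percentile rank (0-100) of `score` within its genre, counting ties as half."""
    if not sorted_genre_scores:
        raise ValueError("no benchmark scores for this genre")
    below = bisect_left(sorted_genre_scores, score)
    ties = bisect_right(sorted_genre_scores, score) - below
    return 100.0 * (below + 0.5 * ties) / len(sorted_genre_scores)


# Illustrative, made-up samples only.
benchmarks = group_by_genre([
    ("Horror", 0.1), ("Horror", 0.2), ("Horror", 0.2), ("Horror", 0.3), ("Horror", 0.6),
    ("Cooking", 0.5), ("Cooking", 0.6), ("Cooking", 0.7), ("Cooking", 0.8),
])
print(genre_percentile(0.3, benchmarks["Horror"]))   # 70.0
print(genre_percentile(0.6, benchmarks["Cooking"]))  # 37.5
```

In this toy example, a 0.3 Horror scene ranks above most of its genre while a 0.6 Cooking scene sits in the lower half of its own. A genre-relative view like this is one way to find usable moments inside intense categories instead of excluding the whole genre.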

Understanding why emotions mix naturally leads to a deeper question: which sensory signals drive these perceptions?

Deconstructing the Senses: How We Solve the Complexity

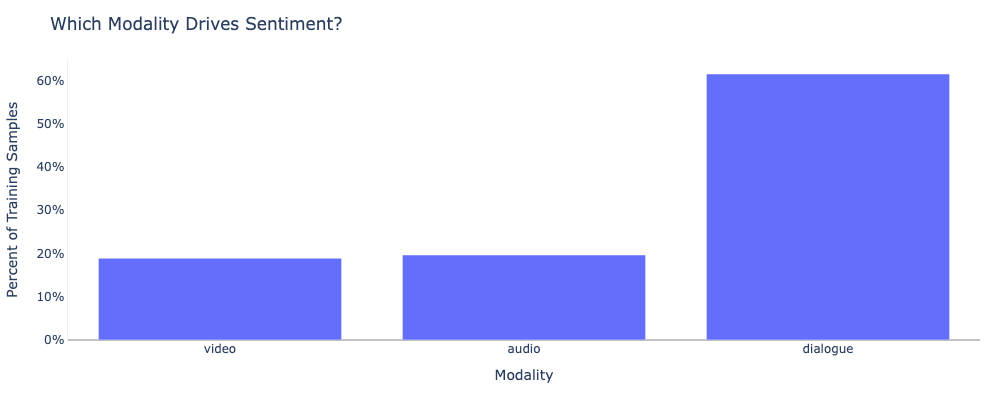

To navigate the challenges of subjectivity and mixed emotions, we must deconstruct every scene into its core components. We analyzed which "sense" (dialogue, audio, or video) was most influential in determining a clip's final sentiment score.

The Deciding Factor

As the chart shows, dialogue is the most frequent dominant signal. This is logical, as what people say is often a direct path to their feelings. However, in a significant number of cases, the audio or the visuals were the deciding factor.
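The text does not specify how the dominant signal is determined. As a hypothetical sketch, suppose each clip comes with a signed contribution per modality (for example, from some attribution method); the dominant modality is then the one with the largest absolute contribution, and tallying those winners yields the kind of counts such a chart summarizes. The input format and function names are assumptions.

```python
from collections import Counter

MODALITIES = ("dialogue", "audio", "video")


def dominant_modality(contributions: dict[str, float]) -> str:
    """Modality with the largest absolute contribution; ties go to the earlier name in MODALITIES."""
    missing = set(MODALITIES) - set(contributions)
    if missing:
        raise KeyError(f"missing contributions for: {sorted(missing)}")
    return max(MODALITIES, key=lambda m: abs(contributions[m]))


def dominance_counts(clips: list[dict[str, float]]) -> Counter:
    """How often each modality was the deciding factor across a set of clips."""
    return Counter(dominant_modality(c) for c in clips)


clips = [
    {"dialogue": 0.40, "audio": 0.10, "video": -0.20},
    {"dialogue": 0.05, "audio": -0.30, "video": 0.10},  # ominous score outweighs neutral dialogue
    {"dialogue": 0.20, "audio": 0.00, "video": 0.10},
]
print(dominance_counts(clips))
```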

Let’s look at what each sensory channel contributes when it takes the lead.

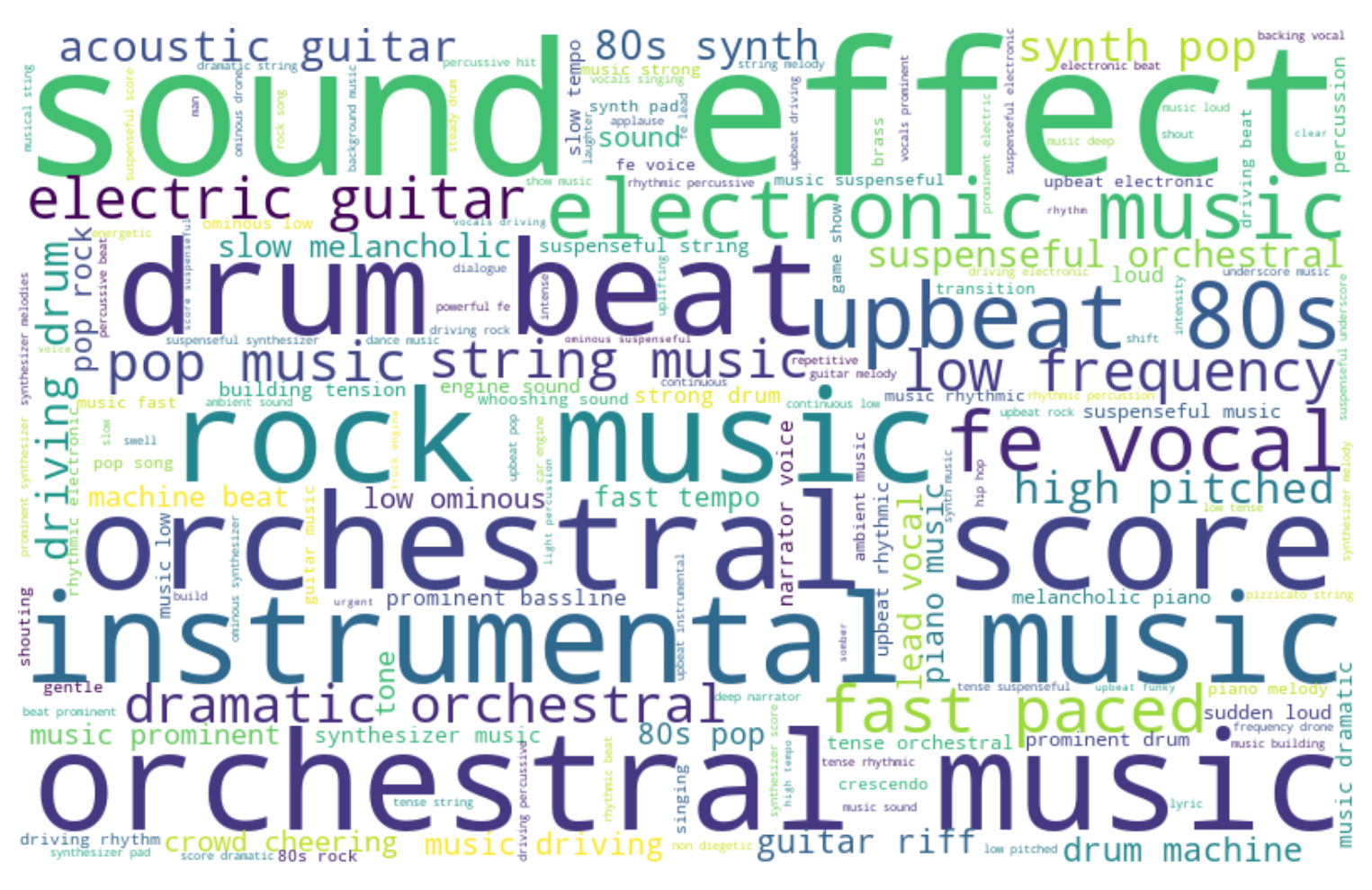

What We Hear: A Symphony of Sound

When audio is the key emotional driver, our analysis captures a rich tapestry of specific genres (orchestral score, rock music), instruments (drum beat, electric guitar), and powerful emotional descriptors (suspenseful, upbeat, dramatic). This is the sonic world that can turn a visually neutral scene into one of intense joy or terror.

What We See: A Picture of Emotion

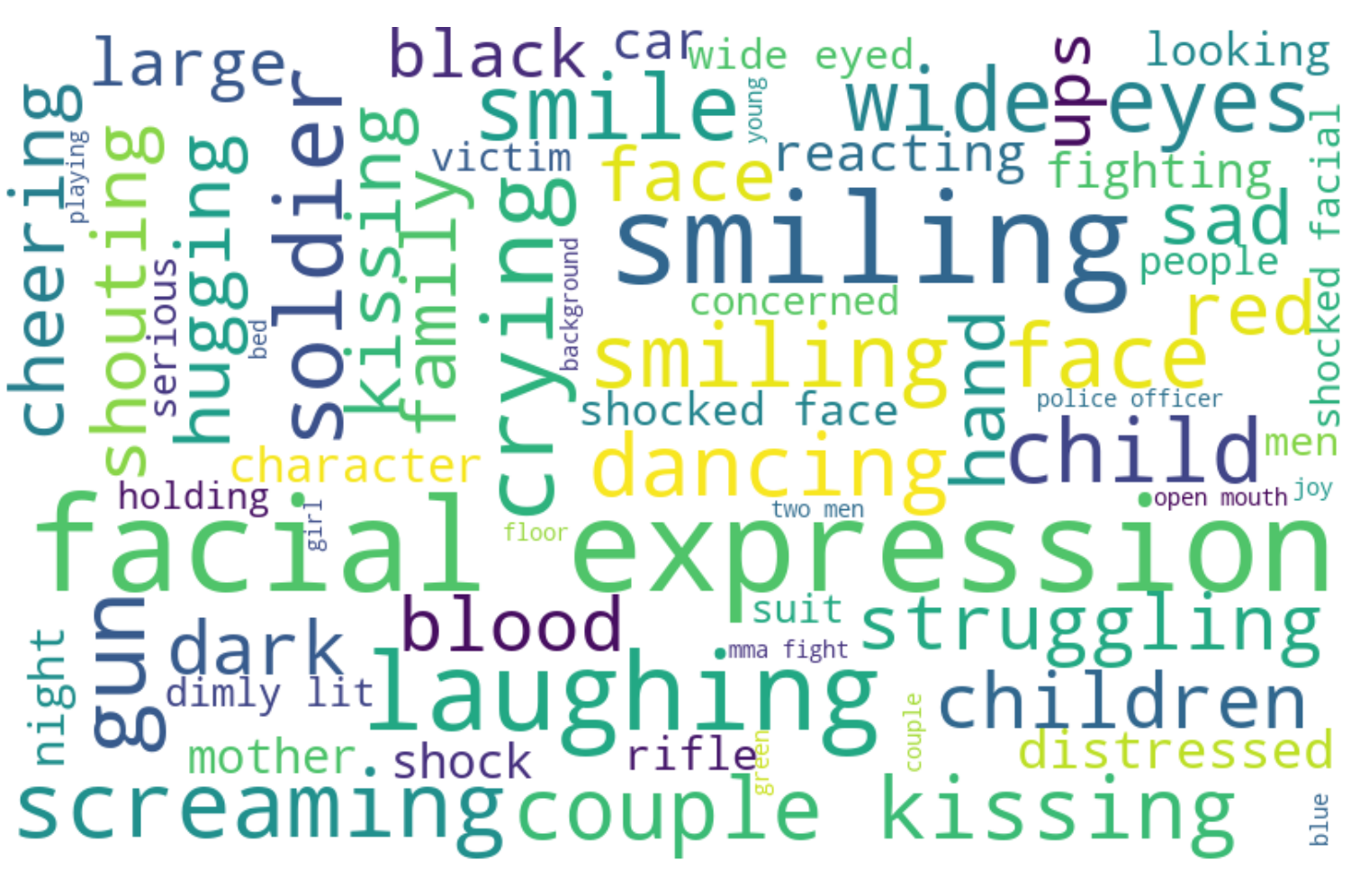

Similarly, when visuals dictate the mood, the strongest signals emerge from human presence and expression: facial expression, smiling, crying, wide eyes, hugging, and kissing. Our system learns to recognize not only direct emotional displays, but also contextual cues such as screaming, struggling, cheering, and visible distress that hint at intensity or conflict. These subtle and overt indicators form the backbone of how vision contributes to storytelling.

Dialogue may carry the literal meaning, but the emotional richness comes alive through the blend of audio and visual cues working together.
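Tag summaries like the audio and visual ones above can be produced by counting descriptor tags only among clips where a given modality was dominant. The sketch below assumes each clip record carries a hypothetical `dominant` field and a list of `tags`; it counts each tag at most once per clip so that a single repetitive clip cannot inflate a tag.

```python
from collections import Counter


def top_tags(clips: list[dict], modality: str, k: int = 5) -> list[tuple[str, int]]:
    """Most frequent tags among clips whose dominant modality is `modality`."""
    counts: Counter = Counter()
    for clip in clips:
        if clip["dominant"] == modality:
            counts.update(set(clip["tags"]))  # de-duplicate tags within a clip
    return counts.most_common(k)


clips = [
    {"dominant": "video", "tags": ["smiling", "hugging"]},
    {"dominant": "video", "tags": ["smiling", "crying", "smiling"]},
    {"dominant": "audio", "tags": ["upbeat", "electric guitar"]},
]
print(top_tags(clips, "video", k=1))  # [('smiling', 2)]
```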

Under the Hood: The Science Behind Our Sentiment Score

Anoki's reliability isn't accidental; it's engineered. At its heart is a sophisticated, multi-layered process designed to deconstruct sentiment and measure it with precision.

It begins with our data. Our models are trained on a large and varied body of content, combining open-source datasets with our own in-house video library. To make our "ground truth" as reliable as possible, we use a consensus-based approach in which both AI models and expert human annotators label each clip across the full spectrum of sentiment, from ‘High Negative’ through ‘Neutral’ to ‘High Positive’. These individual judgments are then averaged into a final, balanced score that reflects every shade of feeling.
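The consensus step can be illustrated with a short sketch: map each categorical label onto the 0.0 to 1.0 spectrum and average across annotators. Both the seven-level scale and the evenly spaced mapping are assumptions made for illustration; the text names only some of the levels and does not publish the exact mapping. The function names are hypothetical.

```python
from statistics import mean, pstdev

LABELS = [
    "High Negative", "Medium Negative", "Low Negative", "Neutral",
    "Low Positive", "Medium Positive", "High Positive",
]  # assumed symmetric 7-level scale


def label_to_score(label: str) -> float:
    """Place a label on the 0.0-1.0 spectrum with even spacing (0.0 = High Negative)."""
    return LABELS.index(label) / (len(LABELS) - 1)


def consensus(labels: list[str]) -> tuple[float, float]:
    """Return the averaged score and the spread (population std. dev.) of annotator scores."""
    if not labels:
        raise ValueError("need at least one label")
    scores = [label_to_score(label) for label in labels]
    return mean(scores), pstdev(scores)
```

Averaging neighboring labels such as ‘Medium Negative’ and ‘High Negative’ lands between them without changing polarity. The spread is returned as well because a plain average cannot distinguish a unanimous ‘Neutral’ from a split between ‘High Negative’ and ‘High Positive’: both average to 0.5.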

When analyzing a new video, our system interrogates it through three crucial modalities simultaneously:

- Visuals: We don't just see pixels; we see meaning. Our system computes rich visual embeddings using state-of-the-art vision models to understand a scene's composition. It then goes a step further, generating specific scores for potent visual cues—like the warmth of a smile versus the gloom of a stormy landscape—fusing both sets of data into a comprehensive visual profile.

- Audio: We generate powerful audio embeddings that capture the soul of a scene’s soundtrack. These are enriched by explicit scores for impactful audio events, from the joy in a burst of laughter to the tension in a dissonant musical cue, ensuring we hear the emotion, not just the noise.

- Text: We don't just read words; we analyze intent. The dialogue and on-screen text are subjected to a battery of tests, including scores from transformer-based sentiment models, analysis of explicit emotions (happy, sad, angry), profanity detection, and even subtle hate speech identification.
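The pattern shared by all three bullets, a learned embedding enriched with explicit cue scores, can be sketched as simple concatenation. Concatenation is one common way to fuse such features and is an assumption here, not a description of Anoki's models; the cue names and the two-dimensional embeddings are placeholders, since real embeddings from vision, audio, or transformer models are far larger.

```python
import math

# Hypothetical cue names, loosely following the examples in the text.
VISUAL_CUES = ("smile", "crying", "stormy_landscape")
AUDIO_CUES = ("laughter", "dissonant_music")
TEXT_CUES = ("transformer_sentiment", "profanity", "hate_speech")


def l2_normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length so embeddings from different models are comparable."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def modality_profile(embedding: list[float], cue_scores: dict[str, float], cue_order: tuple[str, ...]) -> list[float]:
    """Normalized embedding followed by explicit cue scores in a fixed order (missing cues = 0.0)."""
    return l2_normalize(embedding) + [float(cue_scores.get(name, 0.0)) for name in cue_order]


def segment_features(visual: list[float], audio: list[float], text: list[float]) -> list[float]:
    """Concatenate the three modality profiles into one feature vector for a segment."""
    return visual + audio + text


visual = modality_profile([3.0, 4.0], {"smile": 0.9}, VISUAL_CUES)
audio = modality_profile([1.0, 0.0], {"laughter": 0.7}, AUDIO_CUES)
text = modality_profile([0.0, 2.0], {"transformer_sentiment": 0.8}, TEXT_CUES)
features = segment_features(visual, audio, text)
print(len(features))  # 14
```

Filling missing cues with 0.0 keeps every vector the same length, and keeping cue scores as separate dimensions leaves them visible to whatever downstream model consumes the features.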

Finally, a synthesis engine intelligently weighs every signal—visual, audio, and textual—to render a holistic judgment. It analyzes entire scenes, not just fleeting snapshots, because true sentiment unfolds over time. The result is a single, definitive sentiment score you can trust.
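A minimal sketch of such a synthesis step: combine per-modality scores within each segment using a weighted average, then pool segments across the whole scene, weighting by duration so that a brief moment cannot dominate. The fixed weights, the `Segment` fields, and the assumption that each modality already yields a 0.0 to 1.0 score are all hypothetical; a production system would more plausibly learn how to weigh signals than rely on hand-set constants.

```python
from dataclasses import dataclass

# Hypothetical hand-set weights; the text does not disclose how signals are weighed.
WEIGHTS = {"visual": 0.3, "audio": 0.3, "text": 0.4}


@dataclass
class Segment:
    duration_s: float
    visual: float  # per-modality sentiment, assumed to be on the 0.0-1.0 scale
    audio: float
    text: float


def segment_score(seg: Segment, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted average of the three modality scores for one segment."""
    total = sum(weights.values())
    weighted = weights["visual"] * seg.visual + weights["audio"] * seg.audio + weights["text"] * seg.text
    return weighted / total


def scene_score(segments: list[Segment], weights: dict[str, float] = WEIGHTS) -> float:
    """Duration-weighted mean of segment scores, so the whole scene counts, not one snapshot."""
    total_time = sum(s.duration_s for s in segments)
    if total_time <= 0:
        raise ValueError("scene must have positive total duration")
    return sum(segment_score(s, weights) * s.duration_s for s in segments) / total_time


scene = [
    Segment(duration_s=2.0, visual=1.0, audio=1.0, text=1.0),  # brief joyful moment
    Segment(duration_s=6.0, visual=0.2, audio=0.2, text=0.2),  # longer somber stretch
]
print(round(scene_score(scene), 2))  # 0.4
```

The two-second joyful moment lifts the scene only modestly: judged as a whole, and under the assumed scale where 0.5 is neutral, the scene still reads as mildly negative, which illustrates why scoring whole scenes differs from scoring snapshots.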

The Future of Advertising is Emotionally Aware

The most successful brands won't just be the ones with the best creative; they'll be the ones that respect the viewer's emotional journey. The real opportunity isn't just avoiding the wrong moments, but also finding the perfect ones.

Anoki is built for this future, providing the nuanced understanding needed to create more effective, resonant, and respectful advertising.