Exploring AI Tools for Design: Wins, Fails & Lessons

Lately, I’ve been diving deep into AI tools to speed up and elevate parts of my design process, especially for video, sound, and graphics. What started as a few experiments quickly turned into a full-blown exploration of what's possible (and what’s still not quite there). Here’s a breakdown of some of my experiences using various AI platforms, what worked, what didn’t, and where the magic (or frustration) happened.

Video Generation with RunwayML

Generating a video from a still image: I needed a video clip of people watching TV for a custom demo we were producing as part of a big, industry-first partnership. The feedback I got from our partners was that the people in the stock clip looked too fidgety: too much head-turning, too much movement.

Without AI, I would have had to dig through more stock libraries and run a whole new round of design reviews. Instead, I tried something different: I grabbed a single frame from the clip we already had and dropped it into Runway. My prompt was simple: "Use this still to make a 5-second video."

Surprisingly, it nailed it. The result was exactly what I needed, and the process was smooth. The anchors shown on the TV have some AI imperfections, but that wasn't an issue for this project because I was going to drop other content onto the TV screen anyway.

Verdict: Impressive. Quick, easy, and exactly on brief.

Animating an Illustration with RunwayML

I needed a short video to feature as the hero of an important content piece showcasing how Anoki’s multimodal AI analyzes video. My starting point was a beautiful illustration created by our marketing team designer, Deepa Shah, for the PDF version of this content.

Without AI, I would have broken apart elements of Deepa’s illustration and built a short animation in Adobe After Effects. That would’ve meant sourcing live-action footage, layering it with illustrated motion graphics, and spending hours bringing the complexity of multimodal AI video analysis to life. Instead, I turned to Runway. I uploaded Deepa’s illustration and prompted: “Animate this.”

The first result? Beautiful. The animation looked smooth, polished, and visually on-brand.

But when I shared it with the team, they asked for more: they wanted me to add scrolling numbers and highlight more boxes for object detection. That seemed like a simple tweak, so I prompted Runway: “Add scrolling numbers and boxes around objects.”

But this time the result was unexpected. Runway altered elements I hadn’t asked it to touch, and the output looked completely different from the original. Worse, I couldn’t “undo” those changes or guide them back, no matter how many times I re-prompted.

Verdict: Runway was visually impressive at first, but not flexible enough for iterative edits. Great for a one-shot wow moment, tricky if you need tight creative control. Here’s the initial video Runway created, now live on Anoki’s site.

Audio Editing with Adobe Audition vs. Soundtrack Creation with Suno

Adjusting soundtrack duration: I needed a soundtrack to fit the exact length of a demo video for one of our products. The challenge was that the final duration of the video ended up six seconds longer than the team had originally approved, and the ending of the track needed to land precisely on the outro logo sequence.

Without AI, my approach would have been to manually edit the audio — duplicating sections, adding filler, or fading in and out until it matched the timing. That process is time-consuming, and it comes with a lot of trial and error and searching to find the right seams to stitch everything together without breaking the musical flow.

Instead, I tried Adobe Audition. I dropped in the track and used its Remix feature, setting the duration I needed and letting the tool automatically stretch the music to fit. The outcome was almost perfect: the track ran 6 seconds longer without any drop in pitch. The tempo slowed a tiny bit, but it was still acceptable.

Despite this positive outcome, I found the tool a bit rigid: I couldn’t fine-tune the length down to the exact second without manually adding a filler beat.

I could have also used Suno, a generative AI music tool, to create a completely new soundtrack with full flexibility on duration, and I did try it. But I quickly realized that making a really good track still takes time and artistry, even with AI’s help. In the end, using the existing track and adjusting it in Audition was faster and more practical.

Verdict: Adobe Audition is great for quick, near-perfect timing adjustments, while generative AI tools like Suno offer flexibility but still require creative effort. For speed and reliability, sticking with a proven track won the day. Check out the final result: the soundtrack plays in the hero video here.

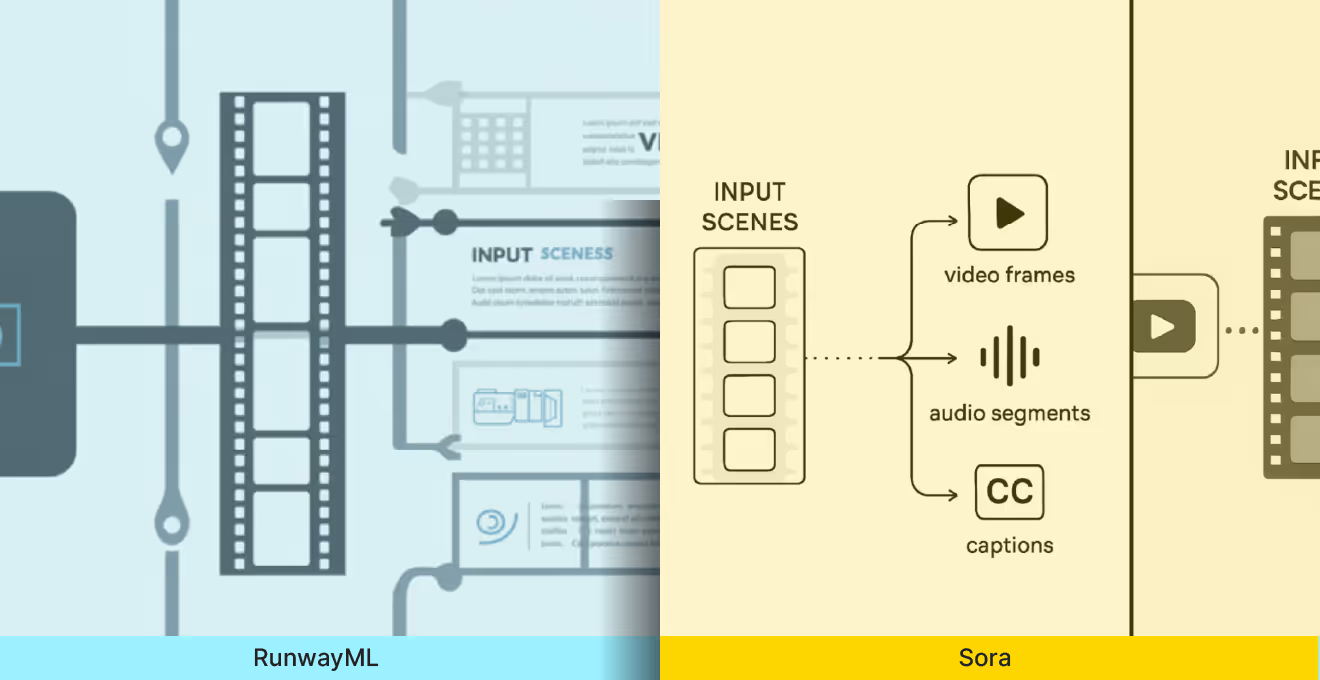

Infographic Animation Attempts with RunwayML and OpenAI's Sora

Animated infographic from verbal prompt: I wanted to create an animated infographic of the Anoki AI platform. My idea was to visually explain how our system processes video into frames, audio, and captions. Without AI, I would have mocked this up in Figma or Illustrator, then animated it in After Effects — a process that would take hours to storyboard, design, and render.

Instead, I turned to generative AI to see how it would handle a clear, structured prompt. With ChatGPT’s help, I wrote out a detailed description: “Make an infographic: On the left, a box with a video icon, a dotted line goes out of that box into a vertical set of 5 boxes like a film strip, titled ‘input scenes.’ From that strip, arrows branch out into three boxes labeled ‘video frames,’ ‘audio segments,’ and ‘captions,’ each with an icon. Animate a dot traveling along the arrows so that as it reaches each icon, the element grows in size, then returns to normal. This should be a 10-second video.”

I tested the prompt in Runway first. The result? Something… but not what I asked for. The output had extra text, a messy layout, and a cluttered feel that lost the simplicity of the concept. I then tried Sora with the same prompt. It did better than Runway: the infographic felt slightly more aligned, but still missed the mark.

Neither tool could take the structured, technical description and turn it into clean, purposeful visuals. Both apps create a static image first and then animate it, so I stopped there: there was no point animating an infographic that didn't work.

Verdict: Generative AI tools struggled to execute a precise, infographic-style animation from a purely verbal prompt. They produced something, but not something useful. For now, when it comes to structured, intentional motion graphics, traditional design tools still win.

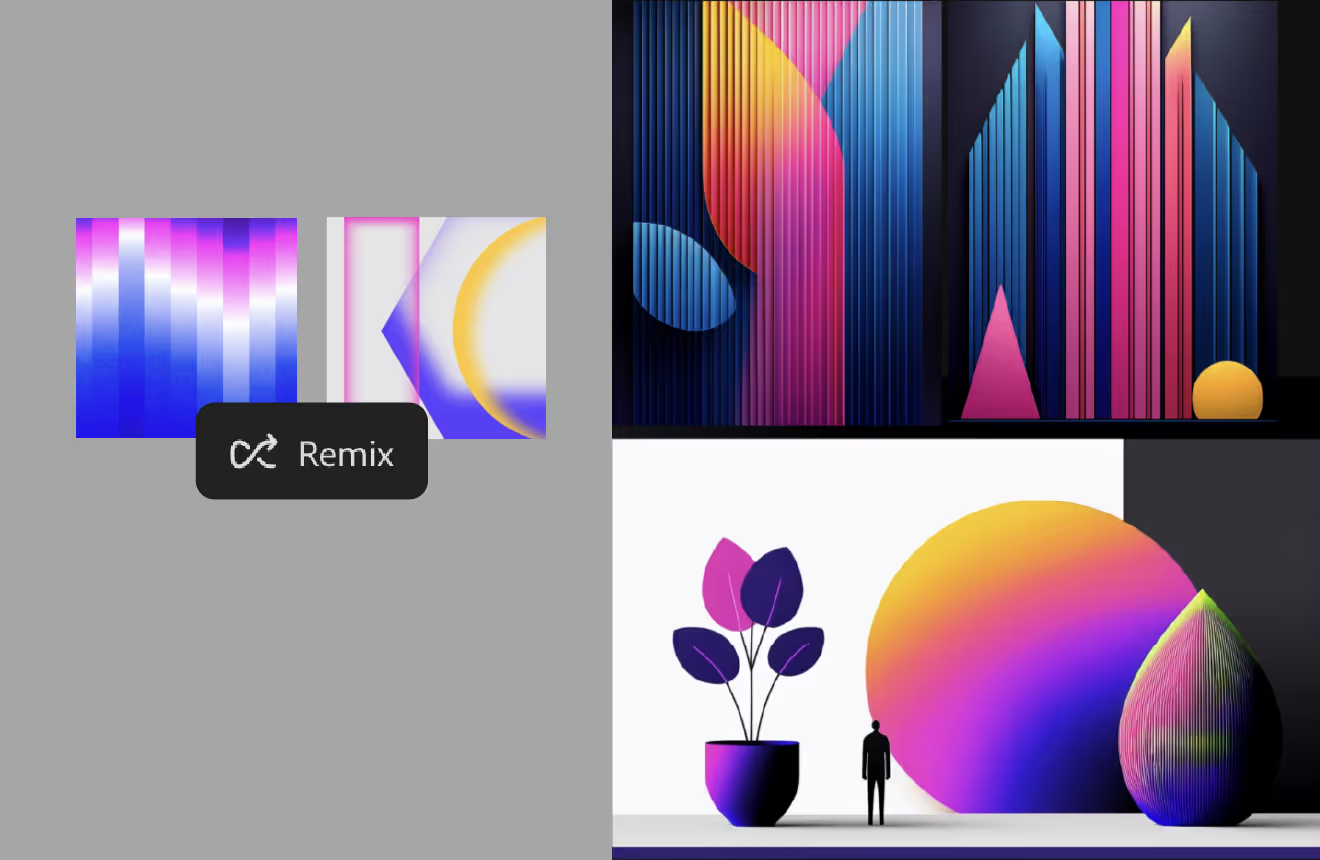

Mixing Reference Images with Adobe Firefly

I needed to create a set of abstract background images for our new case studies web page. This was also an exercise in expanding our brand assets: building a library of visuals we could reuse across the case studies section, social posts, and other design needs. Without AI, my approach would have been to source stock imagery, then edit, crop, and manipulate the visuals to match our brand look and feel.

I thought this was a perfect opportunity to see how generative AI could work as a creative partner. After learning about Adobe Firefly’s “mixing” capabilities, I was excited to give it a try. I picked a few visual references and prompted Firefly to mix them, hoping for inspiring and surprising results.

I was indeed surprised — but not in a good way. Firefly veered so far away from the reference material that the outputs weren’t relevant or useful. In the end, I went back to tried-and-true sources like iStock, where I could choose raw images and manipulate them myself to get the exact look I wanted. This worked well and gave us a set of consistent, polished visuals. Here are some of the case study images we’re now using.

Verdict: Firefly has creative potential, but isn't reliable yet.

Presenter Video from a Still Photo with HeyGen

I wanted to create a short social video of a presenter telling a story, but I didn’t have any video footage, just a photo and a script. Without AI, my only option would have been to organize a full video shoot: booking a presenter, setting up lights and cameras, recording multiple takes, and then editing everything together. That would have been time-consuming and expensive for such a short piece of content.

Instead, I turned to HeyGen. I uploaded a still photo of Anoki’s head of sales, Adam Paz Yener, added the script, and selected a voice. I tried a British accent first (just for fun), then switched to American — both sounded great. To make the delivery more dynamic, I also prompted HeyGen to emphasize key parts of the script — specifically the question and the solution — so it wouldn’t feel flat or robotic.

The outcome was impressive: a lifelike talking-head style video that worked well for social. It wasn’t indistinguishable from a real recording — there’s still a bit of that “AI avatar” feel — but it was fast, polished, and effective. The AI added subtle gestures like eyebrow raises, small hand movements, and a natural-looking smile.

Verdict: Engaging, polished, and shockingly realistic from just a still image. HeyGen is a powerful shortcut for generating simple presenter-style videos when you don’t have footage. Great for speed and budget, but not a full replacement for live filming if you need authentic nuance.

Thoughts for now

Exploring AI tools as a designer feels a bit like panning for gold. Some tools, like Runway and HeyGen, genuinely accelerated my workflow and delivered pro-quality results when used within their limits. Others offered flashes of brilliance but fell short on control and consistency.

In reflecting on my own process, I’ve become aware of a creeping tendency towards over-reliance on these tools: submitting loosely formed prompts based on initial ideas and hoping that the algorithm will compensate with insight or inspiration. But it turns out that AI rarely thrives in a creative vacuum.

I’ve found these tools shine most when I’m already in a creative flow and looking to collaborate. That’s when they feel truly powerful and delightful. The key: knowing when not to use AI is just as important as knowing when to lean on it.

This is just the start of my journey. Looking forward to reporting back with discoveries, insights, and tips.

(Written with the help of our AI assistant, Aria)